Introduction

This document provides basic guidelines to show you how to configure the QNAP TS-x79 series Turbo NAS as the iSCSI datastore for VMware ESXi 5.0. The TS-x79 series Turbo NAS offers class-leading system architecture matched with 10 GbE networking performance designed to meet the needs of demanding server virtualization. With the built-in iSCSI feature, the Turbo NAS is an ideal storage solution for the VMware virtualization environment.

Recommendations

The following recommendations (illustrated in Figure 1) will help you use the QNAP Turbo NAS as an iSCSI datastore in a virtualized environment.

- Create each iSCSI target with only one LUN

Each iSCSI target on the QNAP Turbo NAS is created with two service threads that handle sending and receiving protocol PDUs. If a target hosts multiple LUNs, the I/O requests for all of these LUNs are served by the same pair of threads, which becomes a data transfer bottleneck. Therefore, we recommend assigning only one LUN to each iSCSI target.

- Use “instant allocation” to create iSCSI LUN

Choose “instant allocation” when creating an iSCSI LUN for higher read/write performance in an I/O-intensive environment. Note that creating such a LUN takes longer than creating one with “thin provisioning”.

- Store the operating system (OS) and the data of a VM on different LUNs

Store the VM itself on a VM datastore that is reached through a dedicated vmnic (the ESXi name for a physical network adapter), and map another LUN to the VM to store its data. Use a second vmnic to connect to the data LUN.

- Use multiple targets with LUNs to create an extended datastore to store the VMs

When a LUN is connected to the ESXi hosts, it is represented by a single iSCSI queue on the NAS. When that LUN is shared among multiple virtual machine disks, all I/O has to serialize through this queue, and only one virtual disk’s traffic can traverse it at any point in time, leaving the other virtual disks’ traffic waiting in line. The LUN and its iSCSI queue may become congested, and VM performance may decrease. Therefore, you can create multiple targets with LUNs and combine them into an extended datastore, so that more iSCSI queues handle VM access. In this example, we use four LUNs as an extended datastore in VMware.

- For a normal datastore, limit the number of VMs per datastore to 10

If you want to use just one LUN as a datastore, we recommend placing no more than 10 virtual machines on it. The actual number of VMs it can serve may vary depending on the environment.

Note:

Be careful when a datastore is shared by multiple ESX hosts. VMFS is a clustered file system and uses SCSI reservations as part of its distributed locking algorithm. Administrative operations, such as creating or deleting a virtual disk, extending a VMFS volume, or creating or deleting snapshots, update the file system metadata under locks and therefore result in SCSI reservations. A reservation makes the LUN available exclusively to a single ESX host for a brief period of time, which impacts VM performance.
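The 10-VMs-per-datastore guideline becomes a simple sizing rule: with single-LUN datastores, you need at least ceil(VMs ÷ 10) of them. The following is a minimal Python sketch. The helper name is hypothetical, and the limit is a parameter because the document notes that the real number varies by environment.

```python
def datastores_needed(vm_count, max_vms_per_datastore=10):
    """Smallest number of single-LUN datastores that keeps each at or under the limit."""
    if vm_count < 0 or max_vms_per_datastore < 1:
        raise ValueError("vm_count must be >= 0 and the limit must be >= 1")
    # Integer ceiling division: ceil(vm_count / max_vms_per_datastore)
    return -(-vm_count // max_vms_per_datastore)


if __name__ == "__main__":
    for vms in (8, 10, 25):
        print(vms, "VMs ->", datastores_needed(vms), "datastore(s)")
```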

Deployment topology

The following items are required to deploy the Turbo NAS with VMware ESXi 5.0:

- One ESXi 5.0 host

- Three NIC ports on ESXi host

- Two Ethernet switches

- QNAP Turbo NAS TS-EC1279U-RP

Network configuration of ESXi host:

| vmnic | IP address/subnet mask | Remark |
|---|---|---|
| vmnic 0 | 10.8.12.28/23 | Console management (not necessary) |
| vmnic 1 | 10.8.12.85/23 | A dedicated interface for VM datastore |
| vmnic 2 | 168.95.100.101/16 | A dedicated interface for VM data LUN |

Network configuration of TS-EC1279U-RP Turbo NAS:

| Network Interface | IP address/subnet mask | Remark |
|---|---|---|
| Ethernet 1 | 10.8.12.125/23 | A dedicated interface for VM datastore |
| Ethernet 2 | 168.95.100.100/16 | A dedicated interface for VM data LUN |
| Ethernet 3 | Not used in this demonstration | |
| Ethernet 4 | Not used in this demonstration | |
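This addressing plan only works if each ESXi VMkernel port shares a subnet with its NAS interface, and if the datastore path and the data path stay on separate networks. The following Python sketch uses the standard `ipaddress` module to check both conditions before you configure anything. The `LINKS` mapping and the function names are illustrative and are not part of any QNAP or VMware tool.

```python
import ipaddress

# (ESXi interface, NAS interface) pairs taken from the two tables above.
LINKS = {
    "VM datastore": ("10.8.12.85/23", "10.8.12.125/23"),
    "VM data LUN": ("168.95.100.101/16", "168.95.100.100/16"),
}


def same_subnet(host_if, nas_if):
    """True if both CIDR interface addresses belong to the same network."""
    host_net = ipaddress.ip_interface(host_if).network
    nas_net = ipaddress.ip_interface(nas_if).network
    return host_net == nas_net


def check_links(links):
    """Map each link name to whether its two endpoints share a subnet."""
    return {name: same_subnet(host, nas) for name, (host, nas) in links.items()}


def paths_isolated(links):
    """True if no two links use overlapping networks (traffic stays separated)."""
    nets = [ipaddress.ip_interface(host).network for host, _ in links.values()]
    return all(
        not a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:]
    )


if __name__ == "__main__":
    for name, ok in check_links(LINKS).items():
        print(f"{name}: {'same subnet' if ok else 'DIFFERENT subnets'}")
    print("paths isolated:", paths_isolated(LINKS))
```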

iSCSI configuration of TS-EC1279U-RP Turbo NAS:

| iSCSI Target | iSCSI LUN | Remark |
|---|---|---|
| DataTarget | DataLUN | To store VM data |
| VMTarget1 | VMLUN1 | For the extended VM datastore |
| VMTarget2 | VMLUN2 | For the extended VM datastore |
| VMTarget3 | VMLUN3 | For the extended VM datastore |
| VMTarget4 | VMLUN4 | For the extended VM datastore |
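You can check this layout against the recommendations (one LUN per target, four VMLUNs merged into the extended datastore) by writing the table down as data. This Python sketch assumes each VMLUN is 1 TB, counted here as 1000 GB to match the 4 TB total reported after the merge, and that DataLUN is 200 GB, as stated in the implementation steps. The `PLAN` structure and the helper names are hypothetical.

```python
# Target -> list of (LUN name, size in GB). Sizes are assumptions taken from
# the implementation steps: 1 TB per VMLUN (as 1000 GB), 200 GB for DataLUN.
PLAN = {
    "DataTarget": [("DataLUN", 200)],
    "VMTarget1": [("VMLUN1", 1000)],
    "VMTarget2": [("VMLUN2", 1000)],
    "VMTarget3": [("VMLUN3", 1000)],
    "VMTarget4": [("VMLUN4", 1000)],
}


def targets_breaking_one_lun_rule(plan):
    """Targets that do not have exactly one LUN (should be empty)."""
    return [target for target, luns in plan.items() if len(luns) != 1]


def extended_datastore_gb(plan, prefix="VMTarget"):
    """Total size of the LUNs that will be merged into the extended datastore."""
    return sum(
        size
        for target, luns in plan.items()
        if target.startswith(prefix)
        for _, size in luns
    )


if __name__ == "__main__":
    print("targets with != 1 LUN:", targets_breaking_one_lun_rule(PLAN))
    print("extended datastore:", extended_datastore_gb(PLAN), "GB")
```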

Switches

| Switch | Port | Remark |
|---|---|---|
| A | 0 | To connect to Ethernet 1 of the Turbo NAS |
| A | 1 | To connect to vmnic 1 of the ESXi server |
| B | 0 | To connect to Ethernet 2 of the Turbo NAS |
| B | 1 | To connect to vmnic 2 of the ESXi server |

Note: The iSCSI adapters should be on a private network.

Implementation

Configure the network settings of the Turbo NAS

Log in to the web administration page of the Turbo NAS. Go to “System Administration” > “Network” > “TCP/IP” and configure standalone network settings for Ethernet 1 and Ethernet 2.

- Ethernet 1 IP: 10.8.12.125

- Ethernet 2 IP: 168.95.100.100

Note: To enable link aggregation of inbound and outbound traffic, use the “Balance-alb” bonding mode or the 802.3ad aggregation mode (requires an 802.3ad-compliant switch).

Create iSCSI targets with LUNs for the VM and its data on the NAS

Log in to the web administration page of the Turbo NAS. Go to “Disk Management” > “iSCSI” > “Target Management” and create five iSCSI targets, each with an instant-allocation LUN (see Figure 6). The four VMLUNs (1-4) will be merged into an extended datastore to store your VM. DataLUN, a 200 GB instant-allocation LUN, will be used as the data storage for the VM.

For details on creating iSCSI targets and LUNs on the Turbo NAS, please see the application note “Create and use the iSCSI target service on the QNAP NAS” at http://www.qnap.com/en/index.php?lang=en&sn=5319. Once the iSCSI targets and LUNs have been created on the Turbo NAS, use VMware vSphere Client to log in to the ESXi server.

Configure the network settings of the ESXi server

Run VMware vSphere Client and select the host. Under “Configuration” > “Hardware” > “Networking”, click “Add Networking” to add a vSwitch with a VMkernel port (VMPath) for the VM datastore connection. The ESXi host will use this iSCSI port to reach the VM datastore on the NAS. The IP address of this iSCSI port is 10.8.12.85. Then add another vSwitch with a VMkernel port (DataPath) for the VM’s data connection. The IP address of this iSCSI port is 168.95.100.101.

Enable iSCSI software adapter in ESXi

Select the host. Under “Configuration” > “Hardware” > “Storage Adapters”, select “iSCSI Software Adapter”. Then click “Properties” in the “Details” panel.

Click “Configure” to enable the iSCSI software adapter.

Bind the iSCSI ports to the iSCSI adapter

Select the host. Under “Configuration” > “Hardware” > “Storage Adapters”, select “iSCSI Software Adapter”. Then click “Properties” in the “Details” panel. Go to the “Network Configuration” tab and click “Add” to add the VMkernel ports VMPath and DataPath.

Connect to the iSCSI targets

Select the host. Under “Configuration” > “Hardware” > “Storage Adapters”, select “iSCSI Software Adapter”. Then click “Properties” in the “Details” panel. Go to the “Dynamic Discovery” tab and click “Add” to add one of your NAS IP addresses (10.8.12.125 or 168.95.100.100). Then click “Close” and rescan the software iSCSI bus adapter.

After the rescan, the connected LUNs appear in the “Details” panel.
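If you prefer the ESXi shell to vSphere Client, the enable, bind, discover, and rescan steps above roughly correspond to `esxcli iscsi` and `esxcli storage` commands, which have been available since ESXi 5.0. The following Python sketch only prints those commands so you can review them before running anything. The adapter name `vmhba33` and the VMkernel port names `vmk1`/`vmk2` are assumptions; find your own with `esxcli iscsi adapter list` and `esxcli network ip interface list`, and confirm the syntax against the esxcli reference for your build.

```python
def iscsi_setup_commands(adapter, vmk_ports, nas_ip, port=3260):
    """Return esxcli commands mirroring the vSphere Client steps (not executed)."""
    commands = ["esxcli iscsi software set --enabled=true"]  # enable software adapter
    for vmk in vmk_ports:  # bind each VMkernel port (VMPath, DataPath)
        commands.append(
            f"esxcli iscsi networkportal add --adapter={adapter} --nic={vmk}"
        )
    # Dynamic (SendTargets) discovery against one NAS address; 3260 is the iSCSI port
    commands.append(
        "esxcli iscsi adapter discovery sendtarget add "
        f"--adapter={adapter} --address={nas_ip}:{port}"
    )
    commands.append(f"esxcli storage core adapter rescan --adapter={adapter}")
    return commands


if __name__ == "__main__":
    # Hypothetical names: vmhba33 = software iSCSI adapter, vmk1/vmk2 = VMPath/DataPath
    for cmd in iscsi_setup_commands("vmhba33", ["vmk1", "vmk2"], "10.8.12.125"):
        print(cmd)
```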

Select the preferred path for each LUN

Right-click each of the VMLUNs (1-4) and click “Manage Paths…” to specify its path.

Change Path Selection to “Fixed (VMware)”.

Then select the dedicated path (10.8.12.125) and click “Preferred” to set it as the path for the VM connection.

Repeat the above steps to set up the preferred path (168.95.100.100) for DataLUN (200 GB).
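You can also set the Fixed path policy and the preferred path per device from the ESXi shell with `esxcli storage nmp`. In this Python sketch, the device identifier (`naa.EXAMPLE_VMLUN1`) and the path runtime name (`vmhba33:C0:T1:L0`) are hypothetical placeholders; list the real ones with `esxcli storage nmp path list`. The function only builds the command strings.

```python
def fixed_path_commands(device, preferred_path):
    """esxcli commands that set the Fixed PSP and its preferred path for one LUN."""
    return [
        # Equivalent of choosing "Fixed (VMware)" under Manage Paths
        f"esxcli storage nmp device set --device={device} --psp=VMW_PSP_FIXED",
        # Equivalent of clicking "Preferred" on the chosen path
        "esxcli storage nmp psp fixed deviceconfig set "
        f"--device={device} --path={preferred_path}",
    ]


if __name__ == "__main__":
    # Placeholder identifiers; replace with values from "esxcli storage nmp path list".
    for line in fixed_path_commands("naa.EXAMPLE_VMLUN1", "vmhba33:C0:T1:L0"):
        print(line)
```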

Create and enable a datastore

Once the iSCSI targets have been connected, you can add your datastore on a LUN. Select the host. Under “Configuration” > “Hardware” > “Storage”, click “Add storage…” and select one of the VMLUNs (1 TB) to enable the new datastore (VMdatastore). After a few seconds, you will see the datastore in the ESXi server.

Merge other VMLUNs to the datastore

Select the host. Under “Configuration” > “Hardware” > “Storage”, right click VMdatastore and click “Properties”.

Click “Increase”.

Select the other three VMLUNs and merge them into the datastore.

After all the VMLUNs are merged, the size of the VMdatastore will become 4 TB.

Create your VM and store it in the VM datastore

Right-click the host to create a new virtual machine and select VMdatastore as its destination storage. Click “Next” and follow the wizard to create the VM.

Attach the DataLUN to the VM

Right-click the VM that you just created and click “Edit Settings…” to add a new hard disk. Then click “Next”.

Select “Raw Device Mappings” (RDM) and click “Next”.

Select DataLUN (200 GB) and click “Next”. Follow the wizard to add a new hard disk.

After a few seconds, the new hard disk will be added to your VM.

Your VM is ready to use

All VM settings are now complete. Start the VM, install your applications, and store your data on the RDM disk. If you want to create another VM, store the second VM on VMdatastore as well and create a new LUN on the Turbo NAS for its data.

QNAP RAID System Errors & How To Fix

I – Introduction

II – How to Fix a RAID Shown as “Degraded”

III – How to Fix “In Degraded Mode, Read Only, Failed Drive(s) x” Cases

IV – How to Fix a RAID That Becomes “Unmounted” or “Not Active”

V – “Recover” Doesn’t Work: How to Fix a RAID That Becomes “Unmounted” or “Not Active”

VI – Stuck at Booting / “Starting Services, Please Wait” / Can’t Log In to the QNAP Interface

VII – If the “config_util 1” Command Gives a “Mirror of Root Failed” Error

VIII – How to Fix a “Broken RAID” Scenario

IX – If None of These Guides Work

..

I – Introduction

Warning: These documents are recommended for professional users only. If you don’t know what you’re doing, you may damage your RAID and lose data. QNAP Support in Taiwan does a great job of solving this kind of RAID corruption, and my advice is to contact them directly in such cases.

1 – If the document says “Plug out the HDD and plug it in”, always use the original RAID HDD in the same slot. Don’t use new HDDs!

2 – If you can access your data after these procedures, back it up quickly.

3 – You can lose data on hardware RAID devices and other NAS brands, but it is very unlikely on a QNAP. Recovering your data may still cost time, so a double backup is always recommended for future cases.

4 – If one of your HDDs causes the QNAP to restart itself when you plug it into the NAS, don’t use that HDD to solve these kinds of cases.

This document does not apply to the QNAP TS-109 / TS-209 / TS-409 and their sub-models.

..

II – How to Fix a RAID Shown as “Degraded”

If your RAID system looks like the example shown below, use this section. If not, please don’t try anything in this section:

In this case, the RAID information shows “RAID 5 Drive 1 3”, so your 2nd HDD is out of the RAID. Just plug the broken HDD out of the 2nd HDD slot, wait around 15 seconds, and plug in a new HDD of the same size.
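The reasoning in this example is that a member number missing from the “Drive” list marks the HDD that dropped out, and that is easy to script. This Python sketch assumes the RAID information text has the form shown here (`RAID 5 Drive 1 3`) and that you know how many drives the array should have. `missing_members` is a hypothetical helper, not a QNAP tool.

```python
import re


def missing_members(raid_info, total_drives):
    """Return slot numbers absent from a status string like 'RAID 5 Drive 1 3'."""
    match = re.search(r"Drive\s+([\d\s]+)$", raid_info.strip())
    if match is None:
        raise ValueError(f"unrecognised RAID information: {raid_info!r}")
    present = {int(n) for n in match.group(1).split()}
    return [slot for slot in range(1, total_drives + 1) if slot not in present]


if __name__ == "__main__":
    print(missing_members("RAID 5 Drive 1 3", 3))  # -> [2]: the 2nd HDD is out
```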

..

III – How to Fix “In Degraded Mode, Read Only, Failed Drive(s) x” Cases

If your system shows “In Degraded Mode, Failed Drive(s) x”, you have probably lost more HDDs than your RAID level tolerates, so:

1 – Back up your data.

2 – Reinstall the QNAP from the beginning.

QNAP’s data protection features can protect your data even when 5 of the HDDs in a 6-HDD RAID system report bad sector errors.

..

IV – How to Fix a RAID That Becomes “Unmounted” or “Not Active”

If your RAID system looks like the picture below, follow this section. If not, please don’t try anything in this section:

1 – Update your QNAP to firmware 3.7.2 or higher (for example with QNAP Finder). Then go to Disk Management -> RAID Management, choose your RAID, and press “Recover” to fix it.

If this doesn’t work and the “Recover” button is still available, follow these steps:

1 – While the device is still running, plug out the HDD that you suspect may be broken, and press the Recover button again.

Plug out the broken HDD, that is, the one whose light shines red or whose status on the HDD information screen shows “Normal” / “Abnormal” / “Read-Write Error”, then press Recover. If this doesn’t work, plug the HDD in again and press Recover once more.

2 – If you plug out the HDD, plug it back into the same slot, and press “Recover” once again, it should repair the RAID.

3 – Here are the log files:

4 – The RAID system then comes back in “Degraded Mode”, so quickly back up your data and reinstall the QNAP without the broken HDD.

In this example we lost 2 HDDs from a RAID 5, so it is impossible to fix this RAID. Just reinstall.

..

V – “Recover” Doesn’t Work: How to Fix a RAID That Becomes “Unmounted” or “Not Active”

1 – Download PuTTY and log in to the QNAP.

2 – Make sure the RAID status is active.

To check it, type:

# more /proc/mdstat

If you also want to stop the running services:

# /etc/init.d/services.sh stop

Unmount the volume:

# umount /dev/md0

Stop the array:

# mdadm -S /dev/md0

Now try this command.

For a RAID 5 with 4 HDDs:

mdadm -CfR --assume-clean /dev/md0 -l 5 -n 4 /dev/sda3 /dev/sdb3 /dev/sdc3 /dev/sdd3

-l 5 means RAID 5. If it is a RAID 6, use -l 6.

-n 4 is the number of your HDDs. If you have 8 HDDs, use -n 8.

/dev/sda3 means your first HDD.

/dev/sdd3 means your 4th HDD. List the partitions in their original RAID order.

Example:

If you have a RAID 6 with 8 HDDs, change the command line to:

mdadm -CfR --assume-clean /dev/md0 -l 6 -n 8 /dev/sda3 /dev/sdb3 /dev/sdc3 /dev/sdd3 /dev/sde3 /dev/sdf3 /dev/sdg3 /dev/sdh3

If one of your HDDs has a hardware error that makes the QNAP restart itself, plug out that HDD and type the word “missing” in its place.

For example, if your 2nd HDD is broken, use this command:

# mdadm -CfR --assume-clean /dev/md0 -l 5 -n 4 /dev/sda3 missing /dev/sdc3 /dev/sdd3

3 – Try to mount it manually:

# mount /dev/md0 /share/MD0_DATA -t ext3

# mount /dev/md0 /share/MD0_DATA -t ext4

# mount /dev/md0 /share/MD0_DATA -o ro (read only)

4 – The result should look like this:
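A typo in the member list or the level can scramble the array, so it may help to generate the `mdadm -CfR --assume-clean` line rather than type it by hand. The following Python sketch only builds the string. It also checks that the number of `missing` members does not exceed what RAID 5 (one) or RAID 6 (two) can tolerate. The members must still be passed in their original order, and the function name is hypothetical.

```python
import shlex

# How many members each level can lose and still start (used for 'missing').
TOLERATED_MISSING = {5: 1, 6: 2}


def recreate_command(level, members, md_device="/dev/md0"):
    """Build (but do not run) the 'mdadm -CfR --assume-clean' line.

    members: data partitions in their ORIGINAL RAID order, e.g. '/dev/sda3';
    use None for a pulled-out drive, which becomes the word 'missing'.
    """
    if level not in TOLERATED_MISSING:
        raise ValueError("this sketch only covers RAID 5 and RAID 6")
    lost = sum(1 for member in members if member is None)
    if lost > TOLERATED_MISSING[level]:
        raise ValueError(f"RAID {level} cannot start with {lost} missing members")
    devices = ["missing" if member is None else member for member in members]
    args = ["mdadm", "-CfR", "--assume-clean", md_device,
            "-l", str(level), "-n", str(len(devices)), *devices]
    return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    # Example from this section: 4-drive RAID 5 whose 2nd HDD was pulled out
    print(recreate_command(5, ["/dev/sda3", None, "/dev/sdc3", "/dev/sdd3"]))
```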

..

VI – Stuck at Booting / “Starting Services, Please Wait” / Can’t Log In to the QNAP Interface

Just plug out the broken HDD and restart the QNAP. This should bring the QNAP back up.

If you’re not sure which HDD is broken, please follow these steps:

1 – Power off the NAS.

2 – Plug out all HDDs.

3 – Start the QNAP without HDDs.

4 – QNAP Finder should find the QNAP within a few minutes. Now plug all HDDs back into the same slots.

5 – Download PuTTY from this link and log in with the username / password admin / admin:

http://www.chiark.greenend.org.uk/~sgta … nload.html

6 – Type the following commands:

# config_util 1 -> If this command reports “Root Failed”, don’t go on; contact the QNAP support team.

# storage_boot_init 1

# df -> If /dev/md9 (HDA_ROOT) appears full, please contact QNAP support.

(Now you can reboot your device with the following command. If you want to reset your configuration, skip this.)

# reboot
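The `df` check in step 6 concerns one line of output: the filesystem mounted on `/mnt/HDA_ROOT` (`/dev/md9` on these models). The following Python sketch reads `df` text, for example copied from the PuTTY window, and reports whether that mount is full. It assumes one filesystem per line, with `Use%` in the second-to-last column and the mount point last. The helper names are hypothetical.

```python
def usage_percent(df_text, mount_point="/mnt/HDA_ROOT"):
    """Return the Use% value for mount_point from df output, or None if absent."""
    for line in df_text.splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) >= 6 and fields[-1] == mount_point:
            return int(fields[-2].rstrip("%"))
    return None


def hda_root_full(df_text, threshold=100):
    """True if HDA_ROOT usage reaches the threshold (100% = full)."""
    percent = usage_percent(df_text)
    return percent is not None and percent >= threshold
```

If `hda_root_full` returns True, follow the instruction above and contact QNAP support instead of rebooting.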

..

VII – If the “config_util 1” Command Gives a “Mirror of Root Failed” Error

1 – Power off the NAS.

2 – Plug out all HDDs.

3 – Start the QNAP without HDDs.

4 – QNAP Finder should find the QNAP within a few minutes. Now plug all HDDs back into the same slots.

5 – Download PuTTY from this link and log in with the username / password admin / admin:

http://www.chiark.greenend.org.uk/~sgta … nload.html

6 – Type the following command:

# storage_boot_init 2 -> (this time type storage_boot_init 2, not storage_boot_init 1)

This command should bring the QNAP back to its last “Degraded” mode, and you should see information like this afterwards:

7 – Download WinSCP and log in to the QNAP. Go to share -> MD0_DATA and back up your data quickly.

..

VIII – How to Fix a “Broken RAID” Scenario

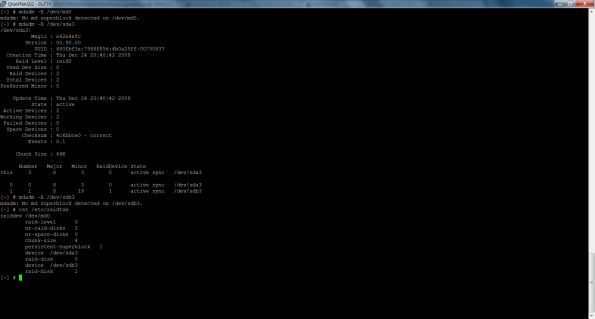

First, I tried to fix it with the “Recover” method, but it didn’t work. In the QNAP RAID Management menu I checked all HDDs, and all of them looked good. So I logged in with PuTTY and typed these commands:

mdadm -E /dev/sda3

mdadm -E /dev/sdb3

mdadm -E /dev/sdc3

mdadm -E /dev/sdd3

Except for the first HDD, the other 3 HDDs didn’t have an md superblock. I also tried the “config_util 1” and “storage_boot_init 2” commands, but both of them gave errors.

The customer had a RAID 5 (-l 5) with 4 HDDs (-n 4), so I typed this command:

# mdadm -CfR --assume-clean /dev/md0 -l 5 -n 4 /dev/sda3 /dev/sdb3 /dev/sdc3 /dev/sdd3

Then I mounted it with this command:

# mount /dev/md0 /share/MD0_DATA -t ext4

And it worked perfectly.
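Only /dev/sda3 still had a superblock in this case, so its `mdadm -E` device table (in the PuTTY session in this section) was the record of the original member order. The following Python sketch reads that table and returns the members ordered by the RaidDevice column, with `None` for an empty slot. It assumes the 0.90-superblock row layout shown here (index, Number, Major, Minor, RaidDevice, state, device). `member_order` is a hypothetical helper.

```python
import re

# Device-table rows: index, Number, Major, Minor, RaidDevice, state..., device
ROW = re.compile(r"^\s*\d+\s+\d+\s+\d+\s+\d+\s+(\d+)\s+(.*)$")


def member_order(examine_text):
    """Return member devices ordered by RaidDevice; None marks an empty slot."""
    slots = {}
    for line in examine_text.splitlines():
        match = ROW.match(line)  # the 'this ...' row and key/value lines do not match
        if match is None:
            continue
        raid_device, rest = int(match.group(1)), match.group(2).split()
        if "spare" in rest:
            continue  # spares do not occupy a data slot
        device = rest[-1] if rest and rest[-1].startswith("/dev/") else None
        slots[raid_device] = device
    if not slots:
        return []
    return [slots.get(i) for i in range(max(slots) + 1)]
```

Joining the result with `" ".join(d or "missing" for d in order)` gives the device list in the order the `mdadm -CfR` command expects.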

Here are the PuTTY steps:

login as: admin

admin@192.168.101.16's password:

[~] # mdadm -E /dev/sda3

/dev/sda3:

Magic : a92b4efc

Version : 00.90.00

UUID : 2d2ee77d:045a6e0f:438d81dd:575c1ff3

Creation Time : Wed Jun 6 20:11:14 2012

Raid Level : raid5

Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)

Array Size : 5855836800 (5584.56 GiB 5996.38 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Fri Jan 11 10:24:40 2013

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Checksum : 8b330731 - correct

Events : 0.4065365

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 0 8 3 0 active sync /dev/sda3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

[~] # mdadm -E /dev/sdb3

mdadm: No md superblock detected on /dev/sdb3.

[~] # mdadm -CfR --assume-clean /dev/md0 -l 5 -n 4 /dev/sda3 /dev/sdb3 /dev/sdc3 /dev/sdd3

mdadm: /dev/sda3 appears to contain an ext2fs file system

size=1560869504K mtime=Fri Jan 11 10:22:54 2013

mdadm: /dev/sda3 appears to be part of a raid array:

level=raid5 devices=4 ctime=Wed Jun 6 20:11:14 2012

mdadm: /dev/sdd3 appears to contain an ext2fs file system

size=1292434048K mtime=Fri Jan 11 10:22:54 2013

mdadm: array /dev/md0 started.

[~] # more /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : active raid5 sdd3[3] sdc3[2] sdb3[1] sda3[0]

5855836800 blocks level 5, 64k chunk, algorithm 2 [4/4] [UUUU]

md4 : active raid1 sda2[2](S) sdd2[0] sdc2[3](S) sdb2[1]

530048 blocks [2/2] [UU]

md13 : active raid1 sda4[0] sdc4[3] sdd4[2] sdb4[1]

458880 blocks [4/4] [UUUU]

bitmap: 0/57 pages [0KB], 4KB chunk

md9 : active raid1 sda1[0] sdc1[3] sdd1[2] sdb1[1]

530048 blocks [4/4] [UUUU]

bitmap: 1/65 pages [4KB], 4KB chunk

unused devices: <none>

[~] # mount /dev/md0 /share/MD0_DATA -t ext4

[~] #

Here is the result:
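The `more /proc/mdstat` output in this session is what tells you whether an array is active and complete: `[4/4] [UUUU]` means all four members are up, and an underscore marks a missing one. The following Python sketch parses that text and lists arrays that are inactive or missing members. It covers only the line formats seen in this document, and the helper names are hypothetical.

```python
import re

ARRAY = re.compile(r"^(md\d+)\s*:\s*(\S+)\s*(.*)$")
LEVEL = re.compile(r"^(raid\d+|linear|multipath)$")
STATUS = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")


def parse_mdstat(text):
    """Parse /proc/mdstat into {array: {state, level, members, working, map}}."""
    arrays, current = {}, None
    for line in text.splitlines():
        header = ARRAY.match(line)
        if header:
            name, state, rest = header.groups()
            arrays[name] = {
                "state": state,  # 'active' or 'inactive'
                "level": next((w for w in rest.split() if LEVEL.match(w)), None),
                "members": None,  # n in [n/m]: devices the array should have
                "working": None,  # m in [n/m]: devices currently working
                "map": None,      # e.g. 'UUUU'; '_' marks a missing member
            }
            current = name
            continue
        status = STATUS.search(line)
        if current and status:
            arrays[current].update(
                members=int(status.group(1)),
                working=int(status.group(2)),
                map=status.group(3),
            )
    return arrays


def unhealthy(arrays):
    """Names of arrays that are not active or have a missing member."""
    return sorted(
        name
        for name, info in arrays.items()
        if info["state"] != "active" or (info["map"] is not None and "_" in info["map"])
    )
```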

..

IX – If None of These Guides Work

Rarely, I can’t solve these kinds of problems on the QNAP itself, and sometimes I plug the HDDs into another QNAP to solve them.

Use a different QNAP with different hardware to solve these kinds of cases.

When you plug in the HDDs and restart the device, the QNAP will ask whether you want to migrate or reinstall; choose the “Migrate” option.

In this case, the HDDs couldn’t boot in a TS-659 Pro, PuTTY commands didn’t work, and we couldn’t even enter the QNAP admin interface.

How to Fix HDD-Based Problems with Another QNAP

The HDDs couldn’t boot in the TS-659 Pro, but after I installed them in a different device (in this case a TS-509 Pro), it booted fine. Of course, the other QNAP must have enough drive bays for all of the HDDs in your array to save your data.

So if everything else fails, just try these procedures with another QNAP.

Obtained from this link

If you cannot access the NAS after Step 3, please do the following:

- Turn off the NAS.

- Take out all the hard disk drives.

- Restart the NAS.

You will hear one beep after pressing the power button, followed by two beeps 2 minutes later. If you cannot hear the first beep, please contact your local reseller or distributor for repair or replacement service.

If you cannot hear the two beeps and QNAP Finder cannot find your NAS, the NAS firmware is damaged. To fix this problem, please follow the “QNAP firmware Recovery / Reflash” documents for your device model.

If you cannot solve the problem yourself, please contact your local reseller or distributor for repair or replacement service.

If QNAP Finder can find the QNAP, follow these steps:

1 – Download the PuTTY software:

http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html

2 – While the device is still running, plug in all of your HDDs in the right order. Don’t restart the QNAP yet. Check that all HDDs are healthy and recognized by the QNAP. If any HDD is not recognized or its size shows as “0”, plug out that HDD.

3 – Log in with PuTTY by entering the QNAP IP, user name, and password (username / password: admin / admin; port: 22).

Now enter the commands below. (Select a command on this page and copy it, then go to PuTTY and press the right mouse button once; this pastes the command automatically.)

# config_util 1 -> (it must say “mirror of root succeed”. If it gives a “mirror of root failed” error, stop here and request help from QNAP support.)

# storage_boot_init 1

# df

If dev/md9 (HDA_ROOT) appears full, please contact QNAP support team

# reboot

Now the QNAP should reboot normally. If you can reach the QNAP interface after the restart, check the RAID system and replace the broken HDD with a new one.

Obtained from this link

“No server downtime when you need to replace the RAID drives”

Contents

- Logical volume status when the RAID operates normally

- When a drive fails, follow the steps below to check the drive status:

- Install a new drive to rebuild RAID 5 by hot swapping

Procedure for hot-swapping the hard drives when the RAID crashes

RAID 5 provides highly secure data protection. It stripes data together with distributed parity across at least three hard drives of the same capacity, so the array survives a single drive failure. The usable capacity of RAID 5 is (N − 1) times the capacity of the smallest member drive, where N is the number of drives. It is particularly suitable for saving important personal or company data.
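For RAID 5, usable capacity is (N − 1) times the smallest member. The `mdadm -E` output in the “Broken RAID” example confirms this: a 4-drive RAID 5 with a Used Dev Size of 1951945600 KiB per member reports an Array Size of 5855836800 KiB, which is exactly 3 × 1951945600. The following Python sketch applies the same arithmetic to RAID 1, 5 and 6. It works on the per-drive data partition size and ignores the small system partitions (md9, md13) that QNAP keeps on each disk. The helper name is hypothetical.

```python
# Minimum member count and number of drives' worth of parity per level.
MIN_DRIVES = {1: 2, 5: 3, 6: 4}
PARITY_DRIVES = {5: 1, 6: 2}


def usable_capacity(level, drive_sizes):
    """Usable size for RAID 1/5/6, in the same unit as drive_sizes."""
    if level not in MIN_DRIVES:
        raise ValueError("this sketch only covers RAID 1, 5 and 6")
    if len(drive_sizes) < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    smallest = min(drive_sizes)  # every member is limited to the smallest one
    if level == 1:
        return smallest  # mirror: one copy's worth of space
    return (len(drive_sizes) - PARITY_DRIVES[level]) * smallest


if __name__ == "__main__":
    print(usable_capacity(5, [1951945600] * 4), "KiB")  # Array Size from mdadm -E
```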

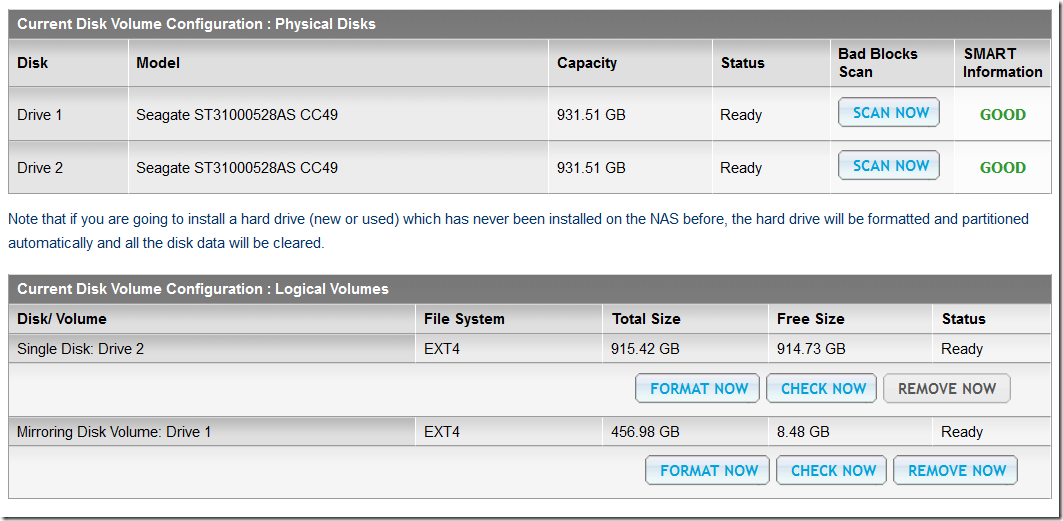

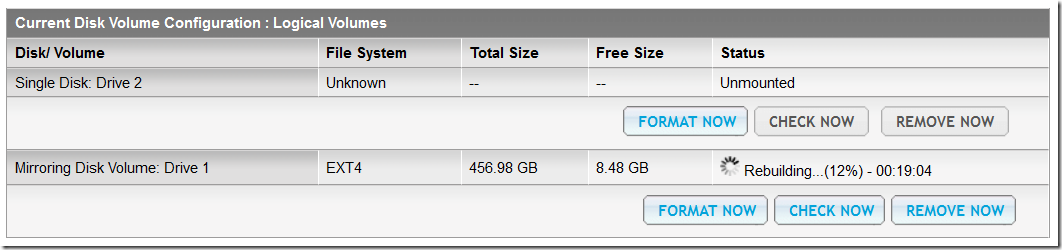

Logical volume status when the RAID operates normally

When the RAID volume operates normally, the volume status is shown as Ready under ‘Disk Management’ > ‘Volume Management’ section.

When a drive fails, follow the steps below to check the drive status:

When a drive fails, you will notice the following:

- The server beeps twice for 1.5 seconds when the drive fails.

- The Status LED flashes red continuously.

- Check the Current Disk Volume Configuration section. The volume status is In degraded mode.

In the Event Logs, you can check the error message for the drive failure and the warning message for the disk volume in degraded mode.

Note: You can send and receive alert e-mails by configuring alert notification. For the settings, please refer to the System Settings / Alert Notification section in the user manual.

Install a new drive to rebuild RAID 5 by hot swapping

Please follow the steps below to hot swap the failed hard drive:

- Prepare a new hard drive to rebuild RAID 5. The capacity of the new drive should be at least that of the failed drive.

- Insert the drive into the drive slot of the server. The server beeps twice for 1.5 seconds. The Status LED flashes red and green alternately.

- Check the Current Disk Volume Configuration section. The volume status is Rebuilding and the progress is shown.

- When the rebuilding is complete, the Status LED lights green and the volume status is Ready. RAID 5 data protection is now active again.

- You can check the disk volume information in the Event Logs.

Note: To avoid unexpected system failure, do not install the new drive before the system is in degraded mode.

Madrid, June 6, 2013 – ESET Spain, a leader in proactive protection against all types of Internet threats with more than 25 years of experience, launches the new version of its corporate mail protection product ESET NOD32 Mail Security, now compatible with Microsoft Windows Server 2012 and Microsoft Exchange Server 2013.

ESET NOD32 Mail Security for Microsoft Exchange Server combines effective antivirus and antispam protection that ensures all malicious content in email is filtered at the server level. It offers full server protection, including the file system itself. It lets network administrators apply policies for specific content based on file type, and manage the status of antivirus security or adjust its configuration with the centralized remote management tool ESET NOD32 Remote Administrator.

The new version of ESET NOD32 Mail Security includes, among others, the following new features:

- Compatible with Microsoft Windows Server 2012 and Microsoft Exchange Server 2013.

- Full configuration of the antispam function from the product interface.

- eShell: runs scripts to set the configuration or perform an action. eShell (ESET Shell) is a completely new command-line interface tool. In addition to all the functions and features available from the GUI (graphical user interface), eShell lets you automate the management of ESET security products.

- Quick and easy migration from previous versions: makes switching to the new version as easy as possible without affecting the performance of the computer fleet.

Other key features and benefits

- Antivirus and antispyware protection: scans all incoming and outgoing email over the POP3, SMTP and IMAP protocols. Filters threats in email, including spyware, at the gateway level. Provides all the tools for total server security, including resident protection and on-demand scanning. In addition, it is equipped with the advanced Threatsense® technology, which combines speed, accuracy and minimal resource consumption against all types of Internet threats, such as rootkits, worms and viruses.

- Antispam protection: blocks unwanted mail and phishing with a high detection rate. The improved antispam engine lets you define different antispam levels with greater precision. All antispam settings are fully integrated into the product interface. It lets the administrator apply policies for certain types of content in email attachments, and includes a new technology that prevents ESET security solutions from being modified or disabled by malware.

- Logs and reports: keeps you up to date on the status of your antivirus security with comprehensive logs and statistics. Spam logs show the sender, the recipient, the spam score, the reason for the spam classification and the action taken. It lets you monitor server performance in real time.

- Smooth operation: automatically excludes critical server files (including the Microsoft Exchange folders) from scanning. The built-in license manager automatically merges two or more licenses registered to the same customer. The new installer keeps all settings when upgrading from a previous version (4.2 or 4.3), and the eShell command-line control lets the administrator run scripts to perform actions or create/modify configurations.

ESET Mail Security for Microsoft Exchange Server is available for trial on the ESET website and can be purchased through the ESET NOD32 Spain reseller network or through its online store.

Obtained from this link

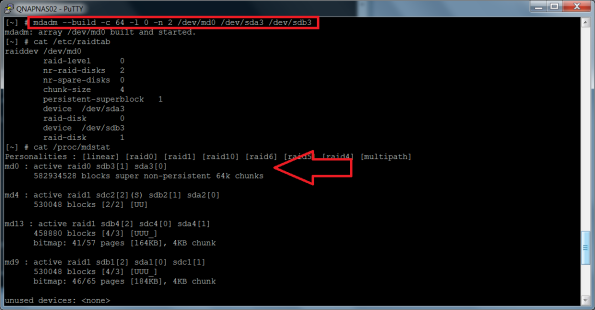

Scenario: replacing a disk in a QNAP TS-212 with an active RAID 1 configuration

The RAID rebuild should start automatically, but sometimes you may end up stuck with 1 Single Disk volume + 1 Mirroring Disk Volume:

According to the QNAP Support article “How can I migrate from Single Disk to RAID 0/1 in TS-210/TS-212?”, the TS-210/TS-212 does not support Online RAID Level Migration. Therefore, please back up the data on the single disk to another location, install the second hard drive, and then recreate the new RAID 0/1 array (the hard drive must be formatted).

The workaround is this:

- Telnet to NAS as Admin

- Check your current disk configuration for Disk #1 and Disk #2:

fdisk -l /dev/sdb

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes

255 heads, 63 sectors/track, 121601 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 66 530125 83 Linux

/dev/sdb2 67 132 530142 83 Linux

/dev/sdb3 133 121538 975193693 83 Linux

/dev/sdb4 121539 121600 498012 83 Linux

fdisk -l /dev/sda

Disk /dev/sda: 1000.2 GB, 1000204886016 bytes

255 heads, 63 sectors/track, 121601 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 1 66 530125 83 Linux

/dev/sda2 67 132 530142 83 Linux

/dev/sda3 133 121538 975193693 83 Linux

/dev/sda4 121539 121600 498012 83 Linux

- SDA is the first disk, SDB is the second disk.

- Verify the current status of the RAID with this command:

mdadm --detail /dev/md0

/dev/md0:

Version : 00.90.03

Creation Time : Thu Sep 22 21:50:34 2011

Raid Level : raid1

Array Size : 486817600 (464.27 GiB 498.50 GB)

Used Dev Size : 486817600 (464.27 GiB 498.50 GB)

Raid Devices : 2

Total Devices : 1

Preferred Minor : 0

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Thu Jul 19 01:13:58 2012

State : active, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

UUID : 72cc06ac:570e3bf8:427adef1:e13f1b03

Events : 0.1879365

Number Major Minor RaidDevice State

0 0 0 0 removed

1 8 3 1 active sync /dev/sda3

- As you can see, /dev/sda3 is working, so Disk #1 is OK, but Disk #2 is missing from the RAID.

- Check whether Disk #2 (/dev/sdb) is mounted (it should be):

mount

/proc on /proc type proc (rw)

none on /dev/pts type devpts (rw,gid=5,mode=620)

sysfs on /sys type sysfs (rw)

tmpfs on /tmp type tmpfs (rw,size=32M)

none on /proc/bus/usb type usbfs (rw)

/dev/sda4 on /mnt/ext type ext3 (rw)

/dev/md9 on /mnt/HDA_ROOT type ext3 (rw)

/dev/md0 on /share/MD0_DATA type ext4 (rw,usrjquota=aquota.user,jqfmt=vfsv0,user_xattr,data=ordered,delalloc,noacl)

tmpfs on /var/syslog_maildir type tmpfs (rw,size=8M)

/dev/sdt1 on /share/external/sdt1 type ufsd (rw,iocharset=utf8,dmask=0000,fmask=0111,force)

tmpfs on /.eaccelerator.tmp type tmpfs (rw,size=32M)

/dev/sdb3 on /share/HDB_DATA type ext3 (rw,usrjquota=aquota.user,jqfmt=vfsv0,user_xattr,data=ordered,noacl)

- Unmount /dev/sdb3 (Disk #2) with this command:

umount /dev/sdb3

- Add Disk #2 to the RAID /dev/md0:

mdadm /dev/md0 --add /dev/sdb3

mdadm: added /dev/sdb3

- Check the RAID status; the rebuild should start automatically:

mdadm --detail /dev/md0

/dev/md0:

Version : 00.90.03

Creation Time : Thu Sep 22 21:50:34 2011

Raid Level : raid1

Array Size : 486817600 (464.27 GiB 498.50 GB)

Used Dev Size : 486817600 (464.27 GiB 498.50 GB)

Raid Devices : 2

Total Devices : 2

Preferred Minor : 0

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Thu Jul 19 01:30:27 2012

State : active, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 0

Spare Devices : 1

Rebuild Status : 0% complete

UUID : 72cc06ac:570e3bf8:427adef1:e13f1b03

Events : 0.1879848

Number Major Minor RaidDevice State

2 8 19 0 spare rebuilding /dev/sdb3

1 8 3 1 active sync /dev/sda3

- Check the rebuild progress (%) on the NAS web interface.

- After the RAID rebuild completes, restart the NAS to clean up the previous mount point folder for sdb3.
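Instead of refreshing the web page, you can follow the rebuild in the `mdadm --detail /dev/md0` output from this walkthrough: the `State` line gains `recovering`, and a `Rebuild Status : N% complete` line appears. The following Python sketch parses that text, for example copied from the Telnet session. The helper names are hypothetical, and it has only been matched against the output format shown here.

```python
import re


def detail_summary(detail_text):
    """Extract the State flags and rebuild percentage from 'mdadm --detail' output."""
    state = re.search(r"^\s*State\s*:\s*(.+?)\s*$", detail_text, re.MULTILINE)
    rebuild = re.search(r"Rebuild Status\s*:\s*(\d+)% complete", detail_text)
    return {
        "state": [flag.strip() for flag in state.group(1).split(",")] if state else [],
        "rebuild_percent": int(rebuild.group(1)) if rebuild else None,
    }


def is_rebuilding(summary):
    """True while mdadm reports the array as recovering or resyncing."""
    return summary["rebuild_percent"] is not None or any(
        flag in ("recovering", "resyncing") for flag in summary["state"]
    )
```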

Obtained from this link

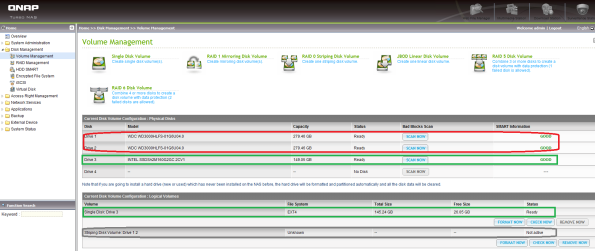

About four weeks ago I had a major issue with my QNAP TS-459 Pro storage device. I did a simple firmware upgrade and, whoops, my RAID0 volume was gone!

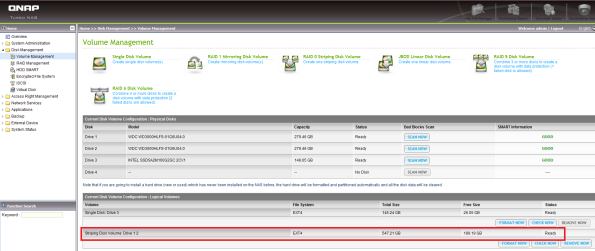

So I was left with three stand alone disks as shown on the screenshot above. The two WDC VelociRaptor disks were supposed to form a single RAID0 volume, well it used to be just before the firmware upgrade…

As an old saying goes, if your data is important backup once, if your data is critical backup twice. My data is important and I had everything rsync’ed on another QNAP TS-639 Pro storage device but still it is pain in the a***. By the way did you know that with the latest beta firmware, your QNAP device can copy your data to a SaaS (Storage as a Service) provider in the Cloud, cool isn’t it ![]()

It took me some time, and a lot of trial and error, to recover my broken RAID0 volume. Fortunately I had a backup and plenty of spare time, so I could play around with the device. The first thing I tried was restoring the latest known working backup of my QNAP storage device. The restore process went flawlessly, but upon reboot I had the same problem: my RAID0 volume was still gone.

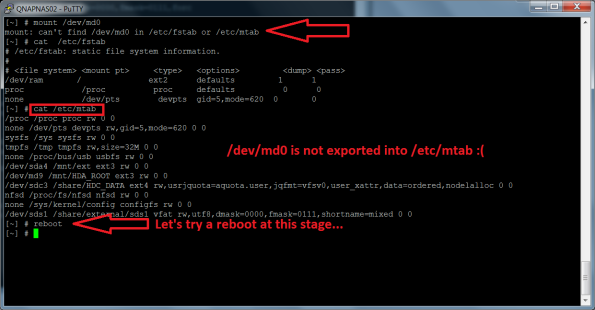

I SSH’ed into the QNAP device and ran some mdadm commands, as shown in the screenshot below.

mdadm -E /dev/md0 confirmed the issue: no RAID0 volume, even though I had restored the QNAP’s configuration settings.

While mdadm -E /dev/sda3 showed that a superblock was present on /dev/sda3, that wasn’t the case for /dev/sdb3. That’s not good at all!

/proc/mdstat confirmed that the restore had not helped: no /dev/md0 was listed in that kernel status file…

Well, the restore was partially helpful. In the screenshots above, the logical volumes panel shows that a striping volume containing disks 1 and 2 was declared but not active, and the second screenshot shows that the striping disk volume was unmounted as a result.

I tried to rebuild the striping configuration with the command mdadm --build -c 64 -l 0 -n 2 /dev/md0 /dev/sda3 /dev/sdb3, and /dev/md0 was successfully added to /proc/mdstat.

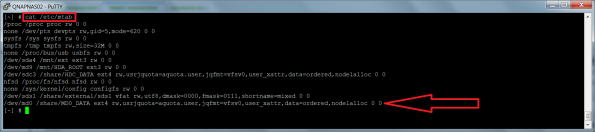

I tried mount /dev/md0, but that did not work. I checked /etc/mtab for /dev/md0 but could not find it; it was not exported as I had expected… At this stage I decided that a reboot was necessary…

The device came back up, and I checked the status of the striping volume in the Volume Management panel. It was still not active, but this time the File System column showed EXT3. I thought the Check Now button might bring the striping volume back to a healthy state, so I tried it. Indeed it worked, as shown in the two screenshots below: Check Now fixed the /dev/md0 entry in /etc/mtab, and the status was now active. Hurray!

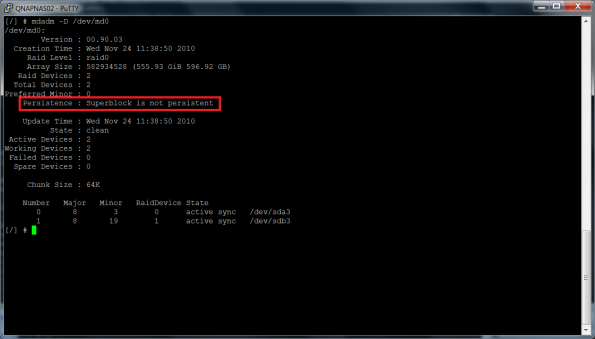

Unfortunately the happiness was short-lived. As soon as the QNAP device rebooted, the striping volume configuration was gone. I could see that the RAID configuration had not been made persistent. In other words, it had not been written to a superblock (the area on each member disk of a RAID set that stores the parameters defining a software RAID volume), as shown in the screenshot below.
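Here is a plausible explanation for the array vanishing on reboot. It comes from a reading of the mdadm manual, not from a confirmed diagnosis. `--build` assembles a legacy array without per-device superblocks, so nothing about /dev/md0 is written to the disks and the array is forgotten at the next boot. By contrast, `--assemble` reads existing superblocks. `--create` writes new superblocks and defines a new array, so it is only safe for recovery when the original parameters are reproduced exactly.

The sketch below only inspects `mdadm --examine` output for the md superblock magic number a92b4efc, the value shown in the examples later in this document. `has_md_superblock` and `check_members` are hypothetical helper names.

```sh
#!/bin/sh
# Sketch: tell from `mdadm --examine` output whether a partition carries an md superblock.
# has_md_superblock and check_members are hypothetical helpers.

# Reads `mdadm -E PART` output (stdout and stderr combined) on stdin.
# Exit status 0 = md magic a92b4efc found, 1 = no superblock.
has_md_superblock() {
    grep -Eq 'Magic[[:space:]]*:[[:space:]]*a92b4efc'
}

# check_members PART... ; 2>&1 captures mdadm's "No md superblock detected" message too
check_members() {
    for part in "$@"; do
        if mdadm -E "$part" 2>&1 | has_md_superblock; then
            echo "$part: md superblock present"
        else
            echo "$part: no md superblock"
        fi
    done
}
```

On the NAS, `check_members /dev/sda3 /dev/sdb3` would show which members actually hold a superblock. This check does not modify anything on the disks.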

I had to face it: I would not be able to recover my striping disk volume, so I decided it was time to re-create it from scratch and copy my data back. I cleared all RAID volume settings from the device and, while at it, decided to find the sweet spot for the chunk size of a RAID0 volume.
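It helps to see what the chunk size actually controls. In a RAID0 set of N equal-sized members, the logical address space is cut into chunk-sized pieces that are dealt out to the members round-robin. The sketch below maps a byte offset on the array to a member index and an offset on that member. It assumes equal-sized members and ignores where the data area starts on each disk, so treat it as an illustration of the layout, not a recovery tool. `raid0_map` is a hypothetical name.

```sh
#!/bin/sh
# Sketch: map a logical byte offset in a RAID0 array to (member index, member offset).
# Assumes equal-sized members; offsets are relative to each member's data area.

# raid0_map OFFSET_BYTES NUM_DISKS CHUNK_BYTES
raid0_map() {
    offset=$1 disks=$2 chunk=$3
    chunk_no=$((offset / chunk))                 # which chunk of the array holds the byte
    disk=$((chunk_no % disks))                   # chunks go round-robin to the members
    row=$((chunk_no / disks))                    # full stripes that come before it
    disk_off=$((row * chunk + offset % chunk))   # position inside that member
    echo "disk=$disk offset=$disk_off"
}
```

With two disks and a 64 KiB chunk, `raid0_map 65536 2 65536` lands at the start of the second disk. A larger chunk keeps more of a sequential request on one disk, while a smaller chunk spreads it across both. Which is faster depends on the workload, which is why measuring it, as done here, is the sensible approach.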

All of this reminds me that even though technology keeps getting better, it is still not error-free, and shit happens. Fortunately no data was lost for good, and I got my home lab back up with an even faster RAID0 volume than before the crash, thanks to the new chunk size.

Obtained from this link

QNAP: useful commands with examples

more /proc/mdstat

fdisk -l

mdadm -E /dev/sda3

mdadm -E /dev/sdb3

mdadm -E /dev/sdc3

mdadm -E /dev/sdd3

For 8 disks, continue with mdadm -E /dev/sde3, mdadm -E /dev/sdf3, mdadm -E /dev/sdg3 and mdadm -E /dev/sdh3, and so on if there are more disks.

mdadm -D /dev/md0

cat /etc/raidtab
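Instead of typing one mdadm -E per disk, a loop can generate the data-partition names. This assumes, as in the examples below, that the data partition is number 3 on every disk and that the disks are named sda, sdb, … in order. `data_partitions` and `examine_all` are hypothetical helper names.

```sh
#!/bin/sh
# Sketch: list the RAID data partitions (/dev/sda3, /dev/sdb3, ...) for N disks
# and run `mdadm -E` on each of them.

# data_partitions N  -> prints /dev/sda3 .. /dev/sdX3 (N <= 26)
data_partitions() {
    n=$1
    i=1
    while [ "$i" -le "$n" ]; do
        letter=$(printf '%s' abcdefghijklmnopqrstuvwxyz | cut -c "$i")
        echo "/dev/sd${letter}3"
        i=$((i + 1))
    done
}

# examine_all N  -> runs mdadm -E on every data partition, keeping error lines too
examine_all() {
    for part in $(data_partitions "$1"); do
        echo "== $part"
        mdadm -E "$part" 2>&1
    done
}
```

On an 8-bay unit, `examine_all 8 | more` prints all eight superblocks in one pass.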

Examples:

With problems: the 4-disk RAID 5 does not appear

[~] # more /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md4 : active raid1 sdd2[2](S) sdc2[1] sda2[0]

530048 blocks [2/2] [UU]

md13 : active raid1 sda4[0] sdd4[2] sdc4[1]

458880 blocks [4/3] [UUU_]

bitmap: 40/57 pages [160KB], 4KB chunk

md9 : active raid1 sda1[0] sdd1[2] sdc1[1]

530048 blocks [4/3] [UUU_]

bitmap: 39/65 pages [156KB], 4KB chunk

unused devices:

[~] #

[~] # fdisk -l

Disk /dev/sdd: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdd1 1 66 530125 83 Linux

/dev/sdd2 67 132 530142 83 Linux

/dev/sdd3 133 243138 1951945693 83 Linux

/dev/sdd4 243139 243200 498012 83 Linux

Disk /dev/sdc: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 66 530125 83 Linux

/dev/sdc2 67 132 530142 83 Linux

/dev/sdc3 133 243138 1951945693 83 Linux

/dev/sdc4 243139 243200 498012 83 Linux

You must set cylinders.

You can do this from the extra functions menu.

Disk /dev/sdya: 0 MB, 0 bytes

255 heads, 63 sectors/track, 0 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdya1 1 267350 2147483647+ ee EFI GPT

Partition 1 has different physical/logical beginnings (non-Linux?):

phys=(0, 0, 1) logical=(0, 0, 2)

Partition 1 has different physical/logical endings:

phys=(1023, 254, 63) logical=(267349, 89, 4)

You must set cylinders.

You can do this from the extra functions menu.

Disk /dev/sda: 0 MB, 0 bytes

255 heads, 63 sectors/track, 0 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 1 267350 2147483647+ ee EFI GPT

Partition 1 has different physical/logical beginnings (non-Linux?):

phys=(0, 0, 1) logical=(0, 0, 2)

Partition 1 has different physical/logical endings:

phys=(1023, 254, 63) logical=(267349, 89, 4)

Disk /dev/sda4: 469 MB, 469893120 bytes

2 heads, 4 sectors/track, 114720 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/sda4 doesn’t contain a valid partition table

Disk /dev/sdx: 515 MB, 515899392 bytes

8 heads, 32 sectors/track, 3936 cylinders

Units = cylinders of 256 * 512 = 131072 bytes

Device Boot Start End Blocks Id System

/dev/sdx1 1 17 2160 83 Linux

/dev/sdx2 18 1910 242304 83 Linux

/dev/sdx3 1911 3803 242304 83 Linux

/dev/sdx4 3804 3936 17024 5 Extended

/dev/sdx5 3804 3868 8304 83 Linux

/dev/sdx6 3869 3936 8688 83 Linux

Disk /dev/md9: 542 MB, 542769152 bytes

2 heads, 4 sectors/track, 132512 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md9 doesn’t contain a valid partition table

Disk /dev/md4: 542 MB, 542769152 bytes

2 heads, 4 sectors/track, 132512 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md4 doesn’t contain a valid partition table

[~] #

[~] # mdadm -E /dev/sda3

/dev/sda3:

Magic : a92b4efc

Version : 00.90.00

UUID : d46b73cf:b63f7efd:94338b81:55ba1b4a

Creation Time : Thu Dec 23 13:21:36 2010

Raid Level : raid5

Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)

Array Size : 5855836800 (5584.56 GiB 5996.38 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 21:07:41 2013

State : active

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 31506936 – correct

Events : 0.7364756

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 4 8 3 4 spare /dev/sda3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -E /dev/sdb3

mdadm: cannot open /dev/sdb3: No such device or address

[~] #

[~] # mdadm -E /dev/sdc3

/dev/sdc3:

Magic : a92b4efc

Version : 00.90.00

UUID : d46b73cf:b63f7efd:94338b81:55ba1b4a

Creation Time : Thu Dec 23 13:21:36 2010

Raid Level : raid5

Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)

Array Size : 5855836800 (5584.56 GiB 5996.38 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 21:07:41 2013

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 31506959 – correct

Events : 0.7364756

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 2 8 35 2 active sync /dev/sdc3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -E /dev/sdd3

/dev/sdd3:

Magic : a92b4efc

Version : 00.90.00

UUID : d46b73cf:b63f7efd:94338b81:55ba1b4a

Creation Time : Thu Dec 23 13:21:36 2010

Raid Level : raid5

Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)

Array Size : 5855836800 (5584.56 GiB 5996.38 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 21:07:41 2013

State : active

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 3150696a – correct

Events : 0.7364756

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 3 8 51 3 active sync /dev/sdd3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -D /dev/md0

mdadm: md device /dev/md0 does not appear to be active.

[~] #

[~] # cat /etc/raidtab

raiddev /dev/md0

raid-level 5

nr-raid-disks 4

nr-spare-disks 0

chunk-size 4

persistent-superblock 1

device /dev/sda3

raid-disk 0

device /dev/sdb3

raid-disk 1

device /dev/sdc3

raid-disk 2

device /dev/sdd3

raid-disk 3

[~] #
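Here is one reading of this example, offered as an interpretation rather than a verified diagnosis. The superblocks on sda3, sdc3 and sdd3 share UUID d46b73cf:b63f7efd:94338b81:55ba1b4a and Events 0.7364756. However, slot 0 is `removed`, sda3 is only a `spare`, and sdb3 cannot be opened at all. A 4-member RAID 5 needs at least 3 active members to start, but only 2 (sdc3 and sdd3) are reachable, which is consistent with md0 not appearing.

Note also that /etc/raidtab says chunk-size 4 while every superblock says 64K. mdadm --assemble works from the superblocks, so those are the values to trust. The sketch extracts a field from mdadm output and computes the minimum member count per RAID level. `md_field` and `min_active` are hypothetical names.

```sh
#!/bin/sh
# Sketch: pull one field out of `mdadm -E` / `mdadm -D` text and compute how many
# members an array needs before it can start.

# md_field NAME  (reads mdadm output on stdin, prints the value after "NAME :")
md_field() {
    awk -v name="$1" -F' : ' '
        { key = $1; sub(/^[ \t]+/, "", key) }   # mdadm right-aligns the keys
        key == name { print $2; exit }'
}

# min_active LEVEL N  -> fewest members that must be present for the array to run
min_active() {
    case $1 in
        raid0) echo "$2" ;;          # no redundancy: every member is required
        raid1) echo 1 ;;             # any single mirror is enough
        raid5) echo $(($2 - 1)) ;;   # tolerates one missing member
        raid6) echo $(($2 - 2)) ;;   # tolerates two missing members
        *) echo "unknown level: $1" >&2; return 1 ;;
    esac
}
```

On the NAS, `for p in /dev/sda3 /dev/sdc3 /dev/sdd3; do echo "$p $(mdadm -E $p | md_field Events)"; done` lines up the event counters. Members with equal counters were updated together, while a member whose counter lags behind most likely dropped out earlier.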

Rebuild in progress on a 4-disk RAID 5

[~] # more /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : active raid5 sda3[4] sdd3[3] sdc3[2] sdb3[1]

8786092800 blocks level 5, 64k chunk, algorithm 2 [4/3] [_UUU]

[=====>……………] recovery = 26.1% (766015884/2928697600) finish=1054.1min speed=34193K/sec

md4 : active raid1 sda2[2](S) sdd2[0] sdc2[3](S) sdb2[1]

530048 blocks [2/2] [UU]

md13 : active raid1 sda4[0] sdc4[3] sdd4[2] sdb4[1]

458880 blocks [4/4] [UUUU]

bitmap: 0/57 pages [0KB], 4KB chunk

md9 : active raid1 sda1[0] sdd1[3] sdc1[2] sdb1[1]

530048 blocks [4/4] [UUUU]

bitmap: 1/65 pages [4KB], 4KB chunk

unused devices:

[~] #
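The recovery line above can be checked by hand. The two numbers in parentheses are 1K blocks done and total for the member being rebuilt, and the speed is in K/sec, so the time left is (total minus done) / speed. Here that is (2928697600 - 766015884) / 34193 ≈ 63249 s ≈ 1054 min, which matches the kernel's finish=1054.1min.

The sketch below does that arithmetic in awk. awk uses floating point, so it will not overflow the way 32-bit shell arithmetic might. `recovery_eta_min` is a hypothetical name.

```sh
#!/bin/sh
# Sketch: estimate the whole minutes left in an md rebuild from /proc/mdstat text (stdin).
# Numbers in parentheses are 1K blocks done/total; speed is in K/sec.

recovery_eta_min() {
    awk '
        /recovery =|resync =/ {
            done_kb = 0; total_kb = 0; speed = 0
            if (match($0, /\([0-9]+\/[0-9]+\)/)) {
                split(substr($0, RSTART + 1, RLENGTH - 2), kb, "/")  # "766015884/2928697600"
                done_kb = kb[1]; total_kb = kb[2]
            }
            if (match($0, /speed=[0-9]+K/))
                speed = substr($0, RSTART + 6, RLENGTH - 7) + 0       # digits only, as a number
            if (speed > 0)
                printf "%d\n", (total_kb - done_kb) / speed / 60      # truncated to minutes
        }'
}
```

Usage on the NAS: `recovery_eta_min < /proc/mdstat`.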

[~] # fdisk -l

You must set cylinders.

You can do this from the extra functions menu.

Disk /dev/sdd: 0 MB, 0 bytes

255 heads, 63 sectors/track, 0 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdd1 1 267350 2147483647+ ee EFI GPT

Partition 1 has different physical/logical beginnings (non-Linux?):

phys=(0, 0, 1) logical=(0, 0, 2)

Partition 1 has different physical/logical endings:

phys=(1023, 254, 63) logical=(267349, 89, 4)

You must set cylinders.

You can do this from the extra functions menu.

Disk /dev/sdc: 0 MB, 0 bytes

255 heads, 63 sectors/track, 0 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 267350 2147483647+ ee EFI GPT

Partition 1 has different physical/logical beginnings (non-Linux?):

phys=(0, 0, 1) logical=(0, 0, 2)

Partition 1 has different physical/logical endings:

phys=(1023, 254, 63) logical=(267349, 89, 4)

You must set cylinders.

You can do this from the extra functions menu.

Disk /dev/sdb: 0 MB, 0 bytes

255 heads, 63 sectors/track, 0 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 267350 2147483647+ ee EFI GPT

Partition 1 has different physical/logical beginnings (non-Linux?):

phys=(0, 0, 1) logical=(0, 0, 2)

Partition 1 has different physical/logical endings:

phys=(1023, 254, 63) logical=(267349, 89, 4)

Disk /dev/sdx: 515 MB, 515899392 bytes

8 heads, 32 sectors/track, 3936 cylinders

Units = cylinders of 256 * 512 = 131072 bytes

Device Boot Start End Blocks Id System

/dev/sdx1 1 17 2160 83 Linux

/dev/sdx2 18 1910 242304 83 Linux

/dev/sdx3 1911 3803 242304 83 Linux

/dev/sdx4 3804 3936 17024 5 Extended

/dev/sdx5 3804 3868 8304 83 Linux

/dev/sdx6 3869 3936 8688 83 Linux

Disk /dev/md9: 542 MB, 542769152 bytes

2 heads, 4 sectors/track, 132512 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md9 doesn’t contain a valid partition table

Disk /dev/md4: 542 MB, 542769152 bytes

2 heads, 4 sectors/track, 132512 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md4 doesn’t contain a valid partition table

Disk /dev/md0: 0 MB, 0 bytes

2 heads, 4 sectors/track, 0 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md0 doesn’t contain a valid partition table

You must set cylinders.

You can do this from the extra functions menu.

Disk /dev/sda: 0 MB, 0 bytes

255 heads, 63 sectors/track, 0 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 1 267350 2147483647+ ee EFI GPT

Partition 1 has different physical/logical beginnings (non-Linux?):

phys=(0, 0, 1) logical=(0, 0, 2)

Partition 1 has different physical/logical endings:

phys=(1023, 254, 63) logical=(267349, 89, 4)

Disk /dev/sda4: 469 MB, 469893120 bytes

2 heads, 4 sectors/track, 114720 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/sda4 doesn’t contain a valid partition table

[~] #
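The disks in this example are 3 TB (Used Dev Size 2998.99 GB per member), which is more than an MBR partition table can address, so they carry a GPT. The fdisk on the NAS appears to understand only MBR. It sees the protective partition of type `ee EFI GPT`, reports 0 MB and asks you to set cylinders, which here is most likely harmless rather than a sign of damage. The MBR limit follows from its 32-bit sector count: 2^32 sectors × 512 bytes = 2199023255552 bytes (2 TiB).

The sketch lists the GPT-partitioned disks in `fdisk -l` output. `gpt_disks` and `mbr_limit_bytes` are hypothetical names.

```sh
#!/bin/sh
# Sketch: from `fdisk -l` output (stdin), print the disks that show the GPT
# protective partition (type ee), i.e. disks using a GPT partition table.

gpt_disks() {
    awk '
        /^Disk \/dev\// { disk = $2; sub(/:$/, "", disk) }   # remember the current disk
        / ee EFI GPT/   { print disk }'
}

# MBR addresses at most 2^32 sectors of 512 bytes; awk avoids 32-bit shell overflow.
mbr_limit_bytes() {
    awk 'BEGIN { printf "%.0f\n", 2^32 * 512 }'
}
```

Running `fdisk -l 2>/dev/null | gpt_disks` on the NAS should list the 3 TB disks. Inspecting their real layout then needs a GPT-aware tool; which one your firmware ships is something to check, not assume.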

[~] # mdadm -E /dev/sda3

/dev/sda3:

Magic : a92b4efc

Version : 00.90.00

UUID : 9d56f3ad:0f6c7547:e7feb5d1:0092c6c8

Creation Time : Wed Dec 22 12:34:02 2010

Raid Level : raid5

Used Dev Size : 2928697600 (2793.02 GiB 2998.99 GB)

Array Size : 8786092800 (8379.07 GiB 8996.96 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 23:07:18 2013

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 8bd3aa41 – correct

Events : 0.17507

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 4 8 3 4 spare /dev/sda3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -E /dev/sdb3

/dev/sdb3:

Magic : a92b4efc

Version : 00.90.00

UUID : 9d56f3ad:0f6c7547:e7feb5d1:0092c6c8

Creation Time : Wed Dec 22 12:34:02 2010

Raid Level : raid5

Used Dev Size : 2928697600 (2793.02 GiB 2998.99 GB)

Array Size : 8786092800 (8379.07 GiB 8996.96 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 23:18:18 2013

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 8bd3aed1 – correct

Events : 0.17753

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 1 8 19 1 active sync /dev/sdb3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -E /dev/sdc3

/dev/sdc3:

Magic : a92b4efc

Version : 00.90.00

UUID : 9d56f3ad:0f6c7547:e7feb5d1:0092c6c8

Creation Time : Wed Dec 22 12:34:02 2010

Raid Level : raid5

Used Dev Size : 2928697600 (2793.02 GiB 2998.99 GB)

Array Size : 8786092800 (8379.07 GiB 8996.96 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 23:21:59 2013

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 8bd3b060 – correct

Events : 0.17833

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 2 8 35 2 active sync /dev/sdc3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -E /dev/sdd3

/dev/sdd3:

Magic : a92b4efc

Version : 00.90.00

UUID : 9d56f3ad:0f6c7547:e7feb5d1:0092c6c8

Creation Time : Wed Dec 22 12:34:02 2010

Raid Level : raid5

Used Dev Size : 2928697600 (2793.02 GiB 2998.99 GB)

Array Size : 8786092800 (8379.07 GiB 8996.96 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Update Time : Wed Jun 5 23:25:08 2013

State : active

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Checksum : 8bd36bc9 – correct

Events : 0.17901

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 3 8 51 3 active sync /dev/sdd3

0 0 0 0 0 removed

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 3 4 spare /dev/sda3

[~] #

[~] # mdadm -D /dev/md0

/dev/md0:

Version : 00.90.03

Creation Time : Wed Dec 22 12:34:02 2010

Raid Level : raid5

Array Size : 8786092800 (8379.07 GiB 8996.96 GB)

Used Dev Size : 2928697600 (2793.02 GiB 2998.99 GB)

Raid Devices : 4

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 6 00:51:25 2013

State : clean, degraded, recovering

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

Rebuild Status : 36% complete

UUID : 9d56f3ad:0f6c7547:e7feb5d1:0092c6c8

Events : 0.20114

Number Major Minor RaidDevice State

4 8 3 0 spare rebuilding /dev/sda3

1 8 19 1 active sync /dev/sdb3

2 8 35 2 active sync /dev/sdc3

3 8 51 3 active sync /dev/sdd3

[~] #

[~] # cat /etc/raidtab

raiddev /dev/md0

raid-level 5

nr-raid-disks 4

nr-spare-disks 0

chunk-size 4

persistent-superblock 1

device /dev/sda3

raid-disk 0

device /dev/sdb3

raid-disk 1

device /dev/sdc3

raid-disk 2

device /dev/sdd3

raid-disk 3

[~] #

Working: 8-disk RAID 5

[~] # more /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : active raid5 sda3[0] sdh3[7] sdg3[6] sdf3[5] sde3[4] sdd3[3] sdc3[2] sdb3[1]

10244987200 blocks level 5, 64k chunk, algorithm 2 [8/8] [UUUUUUUU]

md8 : active raid1 sdh2[2](S) sdg2[3](S) sdf2[4](S) sde2[5](S) sdd2[6](S) sdc2[7](S) sdb2[1] sda2[0]

530048 blocks [2/2] [UU]

md13 : active raid1 sda4[0] sdh4[7] sdg4[6] sdf4[5] sde4[4] sdd4[3] sdc4[2] sdb4[1]

458880 blocks [8/8] [UUUUUUUU]

bitmap: 0/57 pages [0KB], 4KB chunk

md9 : active raid1 sda1[0] sdh1[7] sde1[6] sdd1[5] sdg1[4] sdf1[3] sdc1[2] sdb1[1]

530048 blocks [8/8] [UUUUUUUU]

bitmap: 0/65 pages [0KB], 4KB chunk

unused devices:

[~] #

[~] # fdisk -l

Disk /dev/sde: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sde1 1 66 530125 83 Linux

/dev/sde2 67 132 530142 83 Linux

/dev/sde3 133 243138 1951945693 83 Linux

/dev/sde4 243139 243200 498012 83 Linux

Disk /dev/sdf: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdf1 1 66 530125 83 Linux

/dev/sdf2 67 132 530142 83 Linux

/dev/sdf3 133 243138 1951945693 83 Linux

/dev/sdf4 243139 243200 498012 83 Linux

Disk /dev/sdg: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdg1 1 66 530125 83 Linux

/dev/sdg2 67 132 530142 83 Linux

/dev/sdg3 133 243138 1951945693 83 Linux

/dev/sdg4 243139 243200 498012 83 Linux

Disk /dev/sdh: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdh1 1 66 530125 83 Linux

/dev/sdh2 67 132 530142 83 Linux

/dev/sdh3 133 243138 1951945693 83 Linux

/dev/sdh4 243139 243200 498012 83 Linux

Disk /dev/sdc: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 66 530125 83 Linux

/dev/sdc2 67 132 530142 83 Linux

/dev/sdc3 133 243138 1951945693 83 Linux

/dev/sdc4 243139 243200 498012 83 Linux

Disk /dev/sdb: 1500.3 GB, 1500301910016 bytes

255 heads, 63 sectors/track, 182401 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 66 530125 83 Linux

/dev/sdb2 67 132 530142 83 Linux

/dev/sdb3 133 182338 1463569693 83 Linux

/dev/sdb4 182339 182400 498012 83 Linux

Disk /dev/sdd: 2000.3 GB, 2000398934016 bytes

255 heads, 63 sectors/track, 243201 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdd1 1 66 530125 83 Linux

/dev/sdd2 67 132 530142 83 Linux

/dev/sdd3 133 243138 1951945693 83 Linux

/dev/sdd4 243139 243200 498012 83 Linux

Disk /dev/sda: 1500.3 GB, 1500301910016 bytes

255 heads, 63 sectors/track, 182401 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 1 66 530125 83 Linux

/dev/sda2 67 132 530142 83 Linux

/dev/sda3 133 182338 1463569693 83 Linux

/dev/sda4 182339 182400 498012 83 Linux

Disk /dev/sda4: 469 MB, 469893120 bytes

2 heads, 4 sectors/track, 114720 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/sda4 doesn’t contain a valid partition table

Disk /dev/sdx: 515 MB, 515899392 bytes

8 heads, 32 sectors/track, 3936 cylinders

Units = cylinders of 256 * 512 = 131072 bytes

Device Boot Start End Blocks Id System

/dev/sdx1 1 17 2160 83 Linux

/dev/sdx2 18 1910 242304 83 Linux

/dev/sdx3 1911 3803 242304 83 Linux

/dev/sdx4 3804 3936 17024 5 Extended

/dev/sdx5 3804 3868 8304 83 Linux

/dev/sdx6 3869 3936 8688 83 Linux

Disk /dev/md9: 542 MB, 542769152 bytes

2 heads, 4 sectors/track, 132512 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md9 doesn’t contain a valid partition table

Disk /dev/md8: 542 MB, 542769152 bytes

2 heads, 4 sectors/track, 132512 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md8 doesn’t contain a valid partition table

Disk /dev/md0: 10490.8 GB, 10490866892800 bytes

2 heads, 4 sectors/track, -1733720496 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md0 doesn’t contain a valid partition table

[~] #
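The array sizes in these examples follow from the RAID level. Usable space is (members minus parity members) × per-member size, and the per-member size (Used Dev Size) is limited by the smallest member. Here sda and sdb are 1.5 TB while the other six disks are 2 TB, so every member contributes only 1463569600 KB. The array is therefore 7 × 1463569600 = 10244987200 KB, and the extra space on the 2 TB disks goes unused.

The negative cylinder count fdisk prints for /dev/md0 looks like a 32-bit overflow. 10490866892800 / 4096 = 2561246800 cylinders, which exceeds 2^31 and wraps to -1733720496. The sketch reproduces both figures. `usable_kb` and `int32_cylinders` are hypothetical names, and the formula covers only the levels used in this document.

```sh
#!/bin/sh
# Sketch: reproduce md array sizes (in 1K blocks) from level, member count and
# per-member size. awk is used because the numbers exceed 32-bit shell arithmetic.

# usable_kb LEVEL N MEMBER_KB
usable_kb() {
    awk -v level="$1" -v n="$2" -v m="$3" 'BEGIN {
        if      (level == "raid0") data = n        # striping, no redundancy
        else if (level == "raid1") data = 1        # every member is a full copy
        else if (level == "raid5") data = n - 1    # one member worth of parity
        else if (level == "raid6") data = n - 2    # two members worth of parity
        else { print "unsupported level: " level | "cat 1>&2"; exit 1 }
        printf "%.0f\n", data * m
    }'
}

# int32_cylinders BYTES BYTES_PER_CYLINDER
# The cylinder count wrapped into the signed 32-bit range, which is how the
# NAS fdisk appears to print it.
int32_cylinders() {
    awk -v bytes="$1" -v unit="$2" 'BEGIN {
        c = int(bytes / unit)
        if (c >= 2^31) c -= 2^32
        printf "%.0f\n", c
    }'
}
```

The same `usable_kb` call also reproduces the 8-disk RAID 6 size in the next example: `usable_kb raid6 8 1951945600`.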

[~] # mdadm -E /dev/sda3

/dev/sda3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:09:13 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb0e0b – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 0 8 3 0 active sync /dev/sda3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sdb3

/dev/sdb3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:19:22 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb107e – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 1 8 19 1 active sync /dev/sdb3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sdc3

/dev/sdc3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:22:38 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb1154 – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 2 8 35 2 active sync /dev/sdc3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sdd3

/dev/sdd3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:25:51 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb1227 – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 3 8 51 3 active sync /dev/sdd3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sde3

/dev/sde3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:30:42 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb135c – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 4 8 67 4 active sync /dev/sde3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sdf3

/dev/sdf3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:31:09 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb1389 – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 5 8 83 5 active sync /dev/sdf3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sdg3

/dev/sdg3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:31:34 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb13b4 – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 6 8 99 6 active sync /dev/sdg3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -E /dev/sdh3

/dev/sdh3:

Magic : a92b4efc

Version : 00.90.00

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Update Time : Wed Jun 5 23:31:58 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Checksum : c1bb13de – correct

Events : 0.23432693

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 7 8 115 7 active sync /dev/sdh3

0 0 8 3 0 active sync /dev/sda3

1 1 8 19 1 active sync /dev/sdb3

2 2 8 35 2 active sync /dev/sdc3

3 3 8 51 3 active sync /dev/sdd3

4 4 8 67 4 active sync /dev/sde3

5 5 8 83 5 active sync /dev/sdf3

6 6 8 99 6 active sync /dev/sdg3

7 7 8 115 7 active sync /dev/sdh3

[~] #

[~] # mdadm -D /dev/md0

/dev/md0:

Version : 00.90.03

Creation Time : Wed Mar 9 14:34:57 2011

Raid Level : raid5

Array Size : 10244987200 (9770.38 GiB 10490.87 GB)

Used Dev Size : 1463569600 (1395.77 GiB 1498.70 GB)

Raid Devices : 8

Total Devices : 8

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 6 00:52:46 2013

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

UUID : 478da556:4bba431a:8e29dce8:fdee62fd

Events : 0.23432693

Number Major Minor RaidDevice State

0 8 3 0 active sync /dev/sda3

1 8 19 1 active sync /dev/sdb3

2 8 35 2 active sync /dev/sdc3

3 8 51 3 active sync /dev/sdd3

4 8 67 4 active sync /dev/sde3

5 8 83 5 active sync /dev/sdf3

6 8 99 6 active sync /dev/sdg3

7 8 115 7 active sync /dev/sdh3

[~] #

[~] # cat /etc/raidtab

raiddev /dev/md0

raid-level 5

nr-raid-disks 8

nr-spare-disks 0

chunk-size 4

persistent-superblock 1

device /dev/sda3

raid-disk 0

device /dev/sdb3

raid-disk 1

device /dev/sdc3

raid-disk 2

device /dev/sdd3

raid-disk 3

device /dev/sde3

raid-disk 4

device /dev/sdf3

raid-disk 5

device /dev/sdg3

raid-disk 6

device /dev/sdh3

raid-disk 7

[~] #

Working: 8-disk RAID 6

[~] # more /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : active raid6 sda3[0] sdh3[7] sdg3[6] sdf3[5] sde3[4] sdd3[3] sdc3[2] sdb3[1]

11711673600 blocks level 6, 64k chunk, algorithm 2 [8/8] [UUUUUUUU]

md8 : active raid1 sdh2[2](S) sdg2[3](S) sdf2[4](S) sde2[5](S) sdd2[6](S) sdc2[7](S) sdb2[1] sda2[0]

530048 blocks [2/2] [UU]

md13 : active raid1 sda4[0] sdh4[7] sdg4[6] sdf4[5] sde4[4] sdd4[3] sdc4[2] sdb4[1]

458880 blocks [8/8] [UUUUUUUU]

bitmap: 0/57 pages [0KB], 4KB chunk

md9 : active raid1 sda1[0] sdh1[7] sdf1[6] sdg1[5] sde1[4] sdd1[3] sdc1[2] sdb1[1]

530048 blocks [8/8] [UUUUUUUU]

bitmap: 3/65 pages [12KB], 4KB chunk

unused devices:

[~] #
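This gives a quick health check for /proc/mdstat. Each array's status line shows [n/m], the number of configured members and the number currently working, followed by one U per working member and _ per missing one. If m is smaller than n the array is degraded, as with md13 and md9 ([4/3] [UUU_]) in the first example. Here every array is complete.

The sketch prints degraded arrays. `degraded_arrays` is a hypothetical name, and it only reads the [n/m] counters, not the rebuild state.

```sh
#!/bin/sh
# Sketch: list degraded md arrays from /proc/mdstat-style text on stdin,
# printed as "NAME working/configured".

degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                    # e.g. "md13 : active raid1 ..."
        match($0, /\[[0-9]+\/[0-9]+\]/) {              # the "[n/m]" counter
            split(substr($0, RSTART + 1, RLENGTH - 2), c, "/")
            if (c[2] + 0 < c[1] + 0) print name " " c[2] "/" c[1]
        }'
}
```

Usage on the NAS: `degraded_arrays < /proc/mdstat`. Empty output means every array has all its members.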

[~] # fdisk -l

Disk /dev/sde: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sde1 1 66 530125 83 Linux
/dev/sde2 67 132 530142 83 Linux
/dev/sde3 133 243138 1951945693 83 Linux
/dev/sde4 243139 243200 498012 83 Linux

Disk /dev/sdf: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sdf1 1 66 530125 83 Linux
/dev/sdf2 67 132 530142 83 Linux
/dev/sdf3 133 243138 1951945693 83 Linux
/dev/sdf4 243139 243200 498012 83 Linux

Disk /dev/sdg: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sdg1 1 66 530125 83 Linux
/dev/sdg2 67 132 530142 83 Linux
/dev/sdg3 133 243138 1951945693 83 Linux
/dev/sdg4 243139 243200 498012 83 Linux

Disk /dev/sdh: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sdh1 1 66 530125 83 Linux
/dev/sdh2 67 132 530142 83 Linux
/dev/sdh3 133 243138 1951945693 83 Linux
/dev/sdh4 243139 243200 498012 83 Linux

Disk /dev/sdd: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sdd1 1 66 530125 83 Linux
/dev/sdd2 67 132 530142 83 Linux
/dev/sdd3 133 243138 1951945693 83 Linux
/dev/sdd4 243139 243200 498012 83 Linux

Disk /dev/sdb: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sdb1 1 66 530125 83 Linux
/dev/sdb2 67 132 530142 83 Linux
/dev/sdb3 133 243138 1951945693 83 Linux
/dev/sdb4 243139 243200 498012 83 Linux

Disk /dev/sdc: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sdc1 1 66 530125 83 Linux
/dev/sdc2 67 132 530142 83 Linux
/dev/sdc3 133 243138 1951945693 83 Linux
/dev/sdc4 243139 243200 498012 83 Linux

Disk /dev/sda: 2000.3 GB, 2000398934016 bytes
255 heads, 63 sectors/track, 243201 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System
/dev/sda1 1 66 530125 83 Linux
/dev/sda2 67 132 530142 83 Linux
/dev/sda3 133 243138 1951945693 83 Linux
/dev/sda4 243139 243200 498012 83 Linux

Disk /dev/sda4: 469 MB, 469893120 bytes
2 heads, 4 sectors/track, 114720 cylinders
Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/sda4 doesn't contain a valid partition table

Disk /dev/sdx: 515 MB, 515899392 bytes
8 heads, 32 sectors/track, 3936 cylinders
Units = cylinders of 256 * 512 = 131072 bytes

Device Boot Start End Blocks Id System
/dev/sdx1 1 17 2160 83 Linux
/dev/sdx2 18 1910 242304 83 Linux
/dev/sdx3 1911 3803 242304 83 Linux
/dev/sdx4 3804 3936 17024 5 Extended
/dev/sdx5 3804 3868 8304 83 Linux
/dev/sdx6 3869 3936 8688 83 Linux

Disk /dev/md9: 542 MB, 542769152 bytes
2 heads, 4 sectors/track, 132512 cylinders
Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md9 doesn't contain a valid partition table

Disk /dev/md8: 542 MB, 542769152 bytes
2 heads, 4 sectors/track, 132512 cylinders
Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md8 doesn't contain a valid partition table

Disk /dev/md0: 11992.7 GB, 11992753766400 bytes
2 heads, 4 sectors/track, -1367048896 cylinders
Units = cylinders of 8 * 512 = 4096 bytes

Disk /dev/md0 doesn't contain a valid partition table
[~] #
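The negative cylinder count on the /dev/md0 line looks alarming. It is consistent with a display overflow rather than a problem with the array. md0's real cylinder count is its size in bytes divided by the 4096-byte unit, and that number does not fit in a signed 32-bit integer. The Python check below reproduces the arithmetic. as_int32 is a hypothetical helper that mimics a 32-bit two's-complement wraparound. That this fdisk build stores the value that way is an assumption, inferred from the numbers matching.

```python
# Figures copied from the "Disk /dev/md0" entry of fdisk -l above.
MD0_BYTES = 11992753766400
UNIT_BYTES = 8 * 512  # "Units = cylinders of 8 * 512 = 4096 bytes"

# True cylinder count: 2 heads * 4 sectors = 8 sectors (4096 bytes) per cylinder.
cylinders = MD0_BYTES // UNIT_BYTES

def as_int32(n):
    """Reinterpret the low 32 bits of n as a two's-complement signed integer."""
    n &= 0xFFFFFFFF
    return n - (1 << 32) if n >= (1 << 31) else n

print(cylinders)            # 2927918400
print(as_int32(cylinders))  # -1367048896, the value fdisk printed
```

The same byte count divided by 1024 gives 11711673600, which matches the md0 block count in /proc/mdstat. So fdisk and the kernel agree on the array size.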

[~] # mdadm -E /dev/sda3
/dev/sda3:
Magic : a92b4efc
Version : 00.90.00
UUID : fd2c41b5:17649ac4:1e10b251:f1136e44
Creation Time : Wed Dec 12 04:34:21 2012
Raid Level : raid6
Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)
Array Size : 11711673600 (11169.12 GiB 11992.75 GB)
Raid Devices : 8
Total Devices : 8
Preferred Minor : 0

Update Time : Wed Jun 5 23:10:11 2013
State : clean
Active Devices : 8
Working Devices : 8
Failed Devices : 0
Spare Devices : 0
Checksum : e424ef27 - correct
Events : 0.3789835

Chunk Size : 64K

Number Major Minor RaidDevice State
this 0 8 3 0 active sync /dev/sda3

0 0 8 3 0 active sync /dev/sda3
1 1 8 19 1 active sync /dev/sdb3
2 2 8 35 2 active sync /dev/sdc3
3 3 8 51 3 active sync /dev/sdd3
4 4 8 67 4 active sync /dev/sde3
5 5 8 83 5 active sync /dev/sdf3
6 6 8 99 6 active sync /dev/sdg3
7 7 8 115 7 active sync /dev/sdh3
[~] #
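The sizes in this superblock can be cross-checked against fdisk:

- RAID 6 keeps two members' worth of parity (P and Q), so Array Size should be (8 - 2) times Used Dev Size.
- With a 0.90 superblock, md reserves a 64 KiB area at the end of each member, aligned to 64 KiB. That would explain why Used Dev Size (1951945600) is slightly smaller than the 1951945693 blocks fdisk reports for sda3.

The Python sketch below checks both, assuming that 0.90 layout rule. v090_data_size and raid6_size are illustrative helpers, not mdadm functions.

```python
# Figures copied from fdisk -l and mdadm -E /dev/sda3 above (units: 1 KiB blocks).
PART_KB = 1951945693      # /dev/sda3 "Blocks" from fdisk -l
USED_DEV_KB = 1951945600  # "Used Dev Size"
ARRAY_KB = 11711673600    # "Array Size"
RAID_DEVICES = 8
RESERVED_KB = 64          # assumed 0.90 superblock area at the end of each member

def v090_data_size(part_kb):
    """Round down to a 64 KiB boundary, then drop the 64 KiB superblock area."""
    return (part_kb // RESERVED_KB) * RESERVED_KB - RESERVED_KB

def raid6_size(n_devices, dev_kb):
    """Usable RAID 6 capacity: two members' worth of space goes to P and Q parity."""
    return (n_devices - 2) * dev_kb

print(v090_data_size(PART_KB), v090_data_size(PART_KB) == USED_DEV_KB)
print(raid6_size(RAID_DEVICES, USED_DEV_KB), raid6_size(RAID_DEVICES, USED_DEV_KB) == ARRAY_KB)
```

Both comparisons come out true for the figures above. This suggests the member partitions and the md0 geometry are consistent with each other.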

[~] # mdadm -E /dev/sdb3
/dev/sdb3:
Magic : a92b4efc
Version : 00.90.00
UUID : fd2c41b5:17649ac4:1e10b251:f1136e44
Creation Time : Wed Dec 12 04:34:21 2012
Raid Level : raid6
Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)
Array Size : 11711673600 (11169.12 GiB 11992.75 GB)
Raid Devices : 8
Total Devices : 8
Preferred Minor : 0

Update Time : Wed Jun 5 23:16:04 2013
State : clean
Active Devices : 8
Working Devices : 8
Failed Devices : 0
Spare Devices : 0
Checksum : e424f09a - correct
Events : 0.3789835

Chunk Size : 64K

Number Major Minor RaidDevice State
this 1 8 19 1 active sync /dev/sdb3

0 0 8 3 0 active sync /dev/sda3
1 1 8 19 1 active sync /dev/sdb3
2 2 8 35 2 active sync /dev/sdc3
3 3 8 51 3 active sync /dev/sdd3
4 4 8 67 4 active sync /dev/sde3
5 5 8 83 5 active sync /dev/sdf3
6 6 8 99 6 active sync /dev/sdg3
7 7 8 115 7 active sync /dev/sdh3
[~] #
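The Major/Minor columns follow the usual Linux numbering for sd (SCSI/SATA) disks:

- Major 8 covers sda through sdp.
- Each disk gets a block of 16 minor numbers.
- Partition 3 of the n-th disk (counting from 0) is therefore minor 16n + 3.

A small Python sketch that reproduces the column. sd_minor is a hypothetical helper that only covers the single-letter names sda to sdp.

```python
SD_MAJOR = 8  # block major shared by sda..sdp

def sd_minor(disk_letter, partition):
    """Minor number of /dev/sd<letter><partition>: 16 minors per disk."""
    index = ord(disk_letter) - ord("a")
    return 16 * index + partition

for letter in "abcdefgh":
    print(f"/dev/sd{letter}3", SD_MAJOR, sd_minor(letter, 3))
```

The printed minors (3, 19, 35, ..., 115) match the table in the superblocks. So each RaidDevice slot maps to the partition named next to it.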

[~] # mdadm -E /dev/sdc3
/dev/sdc3:
Magic : a92b4efc
Version : 00.90.00
UUID : fd2c41b5:17649ac4:1e10b251:f1136e44
Creation Time : Wed Dec 12 04:34:21 2012
Raid Level : raid6
Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)
Array Size : 11711673600 (11169.12 GiB 11992.75 GB)
Raid Devices : 8
Total Devices : 8
Preferred Minor : 0

Update Time : Wed Jun 5 23:23:14 2013
State : clean
Active Devices : 8
Working Devices : 8
Failed Devices : 0
Spare Devices : 0
Checksum : e424f25a - correct
Events : 0.3789835

Chunk Size : 64K

Number Major Minor RaidDevice State
this 2 8 35 2 active sync /dev/sdc3

0 0 8 3 0 active sync /dev/sda3
1 1 8 19 1 active sync /dev/sdb3
2 2 8 35 2 active sync /dev/sdc3
3 3 8 51 3 active sync /dev/sdd3
4 4 8 67 4 active sync /dev/sde3
5 5 8 83 5 active sync /dev/sdf3
6 6 8 99 6 active sync /dev/sdg3
7 7 8 115 7 active sync /dev/sdh3
[~] #

[~] # mdadm -E /dev/sdd3
/dev/sdd3:
Magic : a92b4efc
Version : 00.90.00
UUID : fd2c41b5:17649ac4:1e10b251:f1136e44
Creation Time : Wed Dec 12 04:34:21 2012
Raid Level : raid6
Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)
Array Size : 11711673600 (11169.12 GiB 11992.75 GB)
Raid Devices : 8
Total Devices : 8
Preferred Minor : 0

Update Time : Wed Jun 5 23:26:29 2013
State : clean
Active Devices : 8
Working Devices : 8
Failed Devices : 0
Spare Devices : 0
Checksum : e424f32f - correct
Events : 0.3789835

Chunk Size : 64K

Number Major Minor RaidDevice State
this 3 8 51 3 active sync /dev/sdd3

0 0 8 3 0 active sync /dev/sda3
1 1 8 19 1 active sync /dev/sdb3
2 2 8 35 2 active sync /dev/sdc3
3 3 8 51 3 active sync /dev/sdd3
4 4 8 67 4 active sync /dev/sde3
5 5 8 83 5 active sync /dev/sdf3
6 6 8 99 6 active sync /dev/sdg3
7 7 8 115 7 active sync /dev/sdh3
[~] #

[~] # mdadm -E /dev/sde3
/dev/sde3:
Magic : a92b4efc
Version : 00.90.00
UUID : fd2c41b5:17649ac4:1e10b251:f1136e44
Creation Time : Wed Dec 12 04:34:21 2012
Raid Level : raid6
Used Dev Size : 1951945600 (1861.52 GiB 1998.79 GB)
Array Size : 11711673600 (11169.12 GiB 11992.75 GB)
Raid Devices : 8
Total Devices : 8
Preferred Minor : 0

Update Time : Wed Jun 5 23:33:04 2013
State : clean
Active Devices : 8
Working Devices : 8
Failed Devices : 0
Spare Devices : 0
Checksum : e424f4cc - correct
Events : 0.3789835

Chunk Size : 64K

Number Major Minor RaidDevice State
this 4 8 67 4 active sync /dev/sde3

0 0 8 3 0 active sync /dev/sda3
1 1 8 19 1 active sync /dev/sdb3
2 2 8 35 2 active sync /dev/sdc3
3 3 8 51 3 active sync /dev/sdd3
4 4 8 67 4 active sync /dev/sde3
5 5 8 83 5 active sync /dev/sdf3
6 6 8 99 6 active sync /dev/sdg3
7 7 8 115 7 active sync /dev/sdh3
[~] #
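The useful comparison across these superblock dumps is threefold:

- Every member reports the same array UUID.
- Every member reports the same Events counter.
- Each member occupies a different RaidDevice slot.

mdadm generally treats a member whose event count lags the others as out of date. The sketch below hard-codes those three fields from the five superblocks shown here (sda3 to sde3) and checks them. consistent is an illustrative helper, not part of mdadm.

```python
# Fields copied from the mdadm -E outputs above; only sda3..sde3 are shown there.
UUID = "fd2c41b5:17649ac4:1e10b251:f1136e44"
SUPERBLOCKS = {
    "/dev/sda3": {"uuid": UUID, "events": "0.3789835", "slot": 0},
    "/dev/sdb3": {"uuid": UUID, "events": "0.3789835", "slot": 1},
    "/dev/sdc3": {"uuid": UUID, "events": "0.3789835", "slot": 2},
    "/dev/sdd3": {"uuid": UUID, "events": "0.3789835", "slot": 3},
    "/dev/sde3": {"uuid": UUID, "events": "0.3789835", "slot": 4},
}

def consistent(superblocks):
    """True if all members agree on UUID and Events and use distinct slots."""
    uuids = {sb["uuid"] for sb in superblocks.values()}
    events = {sb["events"] for sb in superblocks.values()}
    slots = [sb["slot"] for sb in superblocks.values()]
    return len(uuids) == 1 and len(events) == 1 and len(slots) == len(set(slots))

print(consistent(SUPERBLOCKS))
```

For the members shown this returns True. The Update Time values differ from member to member, but the event counters all match.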