QNAP TS-212: How to rebuild RAID manually from Telnet

Scenario: replacing a disk in a QNAP TS-212 that has an active RAID 1 configuration.

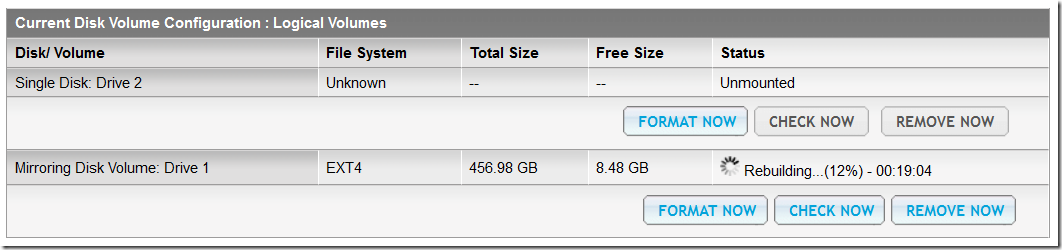

The RAID rebuild should start automatically, but sometimes you end up stuck with two separate volumes: a Single Disk volume and a Mirroring Disk volume.

According to the QNAP Support article "How can I migrate from Single Disk to RAID 0/1 in TS-210/TS-212?", the TS-210/TS-212 does not support Online RAID Level Migration. QNAP therefore recommends backing up the data on the single disk to another location, installing the second hard drive, and then recreating the new RAID 0/1 array (the hard drive must be formatted).

The workaround is this:

- Connect to the NAS via Telnet as admin.

- Check the current partition layout of Disk #1 and Disk #2:

```
fdisk -l /dev/sda

Disk /dev/sda: 1000.2 GB, 1000204886016 bytes
255 heads, 63 sectors/track, 121601 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sda1               1          66      530125   83  Linux
/dev/sda2              67         132      530142   83  Linux
/dev/sda3             133      121538   975193693   83  Linux
/dev/sda4          121539      121600      498012   83  Linux
```

```
fdisk -l /dev/sdb

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes
255 heads, 63 sectors/track, 121601 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1          66      530125   83  Linux
/dev/sdb2              67         132      530142   83  Linux
/dev/sdb3             133      121538   975193693   83  Linux
/dev/sdb4          121539      121600      498012   83  Linux
```

SDA is the first disk (Disk #1) and SDB is the second disk (Disk #2).
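Before adding /dev/sdb3 to the array, it can be worth confirming that both disks carry the same partition layout, since mdadm will not add a member that is smaller than the existing one. The following is a minimal POSIX sh sketch, assuming the `fdisk -l` output format shown above and `/dev/sdX`-style device names; `partition_layout` and `same_layout` are hypothetical helper names (not QNAP tools), and the snippet has not been tested on the NAS's BusyBox shell.

```sh
#!/bin/sh
# partition_layout: read `fdisk -l` output on stdin and print one line per
# partition as "<number> <start> <end> <blocks> <id>", dropping the disk name
# so that listings of /dev/sda and /dev/sdb can be compared directly.
partition_layout() {
    awk '$1 ~ /^\/dev\// {
        num = $1
        sub(/^\/dev\/[a-z]+/, "", num)   # "/dev/sdb3" becomes "3"
        i = ($2 == "*") ? 3 : 2          # skip the optional Boot flag
        print num, $i, $(i + 1), $(i + 2), $(i + 3)
    }'
}

# same_layout FILE_A FILE_B: succeed if two saved `fdisk -l` listings
# describe identical partitions (and are not empty).
same_layout() {
    a=$(partition_layout < "$1")
    b=$(partition_layout < "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, save both listings with `fdisk -l /dev/sda > /tmp/sda.txt` and `fdisk -l /dev/sdb > /tmp/sdb.txt`, then run `same_layout /tmp/sda.txt /tmp/sdb.txt && echo "layouts match"`.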

- Verify the current status of the RAID array with this command:

```
mdadm --detail /dev/md0
/dev/md0:
        Version : 00.90.03
  Creation Time : Thu Sep 22 21:50:34 2011
     Raid Level : raid1
     Array Size : 486817600 (464.27 GiB 498.50 GB)
  Used Dev Size : 486817600 (464.27 GiB 498.50 GB)
   Raid Devices : 2
  Total Devices : 1
Preferred Minor : 0
    Persistence : Superblock is persistent

  Intent Bitmap : Internal

    Update Time : Thu Jul 19 01:13:58 2012
          State : active, degraded
 Active Devices : 1
Working Devices : 1
 Failed Devices : 0
  Spare Devices : 0

           UUID : 72cc06ac:570e3bf8:427adef1:e13f1b03
         Events : 0.1879365

    Number   Major   Minor   RaidDevice State
       0       0        0        0      removed
       1       8        3        1      active sync   /dev/sda3
```

As you can see, /dev/sda3 is working, so Disk #1 is OK, but Disk #2 is missing from the RAID array (its slot is shown as removed).
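The `State` line is the quickest way to tell whether the array still needs attention. A minimal sketch, assuming `mdadm --detail` keeps the `State : ...` layout shown above; `md_state` and `md_is_degraded` are hypothetical helper names.

```sh
#!/bin/sh
# md_state: print the value of the "State :" line from `mdadm --detail`
# output read on stdin, e.g. "active, degraded".
md_state() {
    sed -n 's/^[[:space:]]*State :[[:space:]]*//p'
}

# md_is_degraded: succeed if the `mdadm --detail` output on stdin
# reports a degraded array.
md_is_degraded() {
    md_state | grep -q 'degraded'
}
```

With the output above, `mdadm --detail /dev/md0 | md_is_degraded && echo "md0 is degraded"` should print the message.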

- Check whether Disk #2 (/dev/sdb) is mounted (it should be):

```
mount
/proc on /proc type proc (rw)
none on /dev/pts type devpts (rw,gid=5,mode=620)
sysfs on /sys type sysfs (rw)
tmpfs on /tmp type tmpfs (rw,size=32M)
none on /proc/bus/usb type usbfs (rw)
/dev/sda4 on /mnt/ext type ext3 (rw)
/dev/md9 on /mnt/HDA_ROOT type ext3 (rw)
/dev/md0 on /share/MD0_DATA type ext4 (rw,usrjquota=aquota.user,jqfmt=vfsv0,user_xattr,data=ordered,delalloc,noacl)
tmpfs on /var/syslog_maildir type tmpfs (rw,size=8M)
/dev/sdt1 on /share/external/sdt1 type ufsd (rw,iocharset=utf8,dmask=0000,fmask=0111,force)
tmpfs on /.eaccelerator.tmp type tmpfs (rw,size=32M)
/dev/sdb3 on /share/HDB_DATA type ext3 (rw,usrjquota=aquota.user,jqfmt=vfsv0,user_xattr,data=ordered,noacl)
```
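If you are not sure where Disk #2 is mounted, the `mount` output can be filtered by device name. A small sketch, assuming mount points contain no spaces; `mount_point_of` is a hypothetical helper name.

```sh
#!/bin/sh
# mount_point_of DEVICE: read `mount` output on stdin and print the mount
# point of DEVICE (nothing if it is not mounted). Assumes mount points
# contain no spaces.
mount_point_of() {
    awk -v dev="$1" '$1 == dev && $2 == "on" { print $3 }'
}
```

On the system shown above, `mount | mount_point_of /dev/sdb3` would print `/share/HDB_DATA`.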

- Unmount Disk #2's data partition, /dev/sdb3:

```
umount /dev/sdb3
```

- Add Disk #2 to the RAID array /dev/md0. The rebuild overwrites everything on /dev/sdb3, so copy off any data you still need first:

```
mdadm /dev/md0 --add /dev/sdb3
mdadm: added /dev/sdb3
```

- Check the RAID status; the rebuild should start automatically:

mdadm –detail /dev/md0

/dev/md0:

Version : 00.90.03

Creation Time : Thu Sep 22 21:50:34 2011

Raid Level : raid1

Array Size : 486817600 (464.27 GiB 498.50 GB)

Used Dev Size : 486817600 (464.27 GiB 498.50 GB)

Raid Devices : 2

Total Devices : 2

Preferred Minor : 0

Persistence : Superblock is persistentIntent Bitmap : InternalUpdate Time : Thu Jul 19 01:30:27 2012

State : active, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 0

Spare Devices : 1Rebuild Status : 0% complete

UUID : 72cc06ac:570e3bf8:427adef1:e13f1b03

Events : 0.1879848Number Major Minor RaidDevice State

2 8 19 0 spare rebuilding /dev/sdb3

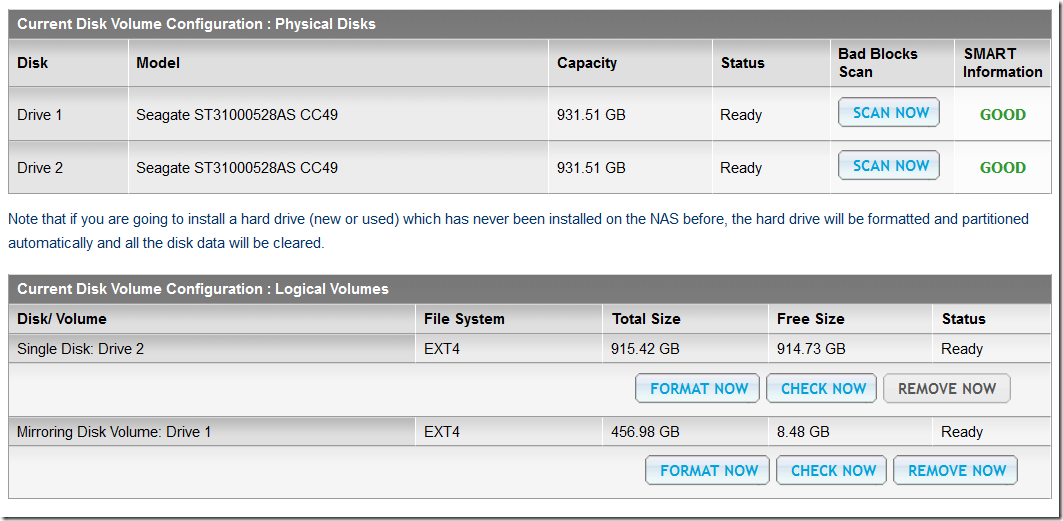

1 8 3 1 active sync /dev/sda3 - Check the NAS site for the rebuild % progress
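Besides the web interface, the progress can also be followed from the Telnet session, either with `cat /proc/mdstat` or by polling `mdadm --detail`. A sketch of the second approach, assuming (as in the output above) that the `Rebuild Status : N% complete` line is only printed while the rebuild is running; `rebuild_percent` and `wait_for_rebuild` are hypothetical helper names and the 60-second polling interval is arbitrary.

```sh
#!/bin/sh
# rebuild_percent: read `mdadm --detail` output on stdin and print N from
# the "Rebuild Status : N% complete" line (nothing if no rebuild is running).
rebuild_percent() {
    sed -n 's/^[[:space:]]*Rebuild Status :[[:space:]]*\([0-9]*\)% complete.*/\1/p'
}

# wait_for_rebuild ARRAY: print the progress once a minute until
# `mdadm --detail ARRAY` no longer reports a rebuild.
wait_for_rebuild() {
    while :; do
        p=$(mdadm --detail "$1" | rebuild_percent)
        [ -n "$p" ] || break
        echo "$1 rebuild: ${p}% complete"
        sleep 60
    done
    echo "$1: no rebuild in progress"
}
```

Running `wait_for_rebuild /dev/md0` keeps printing the percentage until the rebuild line disappears from the `mdadm --detail` output.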

- After the RAID rebuild completes, restart the NAS to clean up the leftover mount point folder of sdb3 (/share/HDB_DATA).
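The unmount and add steps above can be wrapped in a guarded helper that only acts when the array is actually reported as degraded. This is a sketch under assumptions, not a tested QNAP procedure: `run`, `readd_member` and the `DRY_RUN` variable are hypothetical, and with `DRY_RUN` set the helper only prints the commands it would execute.

```sh
#!/bin/sh
# run: execute a command, or only print it when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# readd_member ARRAY PARTITION: hypothetical helper that re-adds PARTITION
# to a degraded RAID 1 ARRAY, unmounting it first if needed.
# WARNING: the resync that follows overwrites everything on PARTITION.
readd_member() {
    array=$1
    part=$2
    # Only proceed when mdadm reports the array as degraded.
    if ! mdadm --detail "$array" | grep -q 'State :.*degraded'; then
        echo "$array is not degraded; nothing to do" >&2
        return 1
    fi
    # Unmount the partition if it is still mounted as a standalone volume.
    if mount | awk -v d="$part" '$1 == d { found = 1 } END { exit (found ? 0 : 1) }'; then
        run umount "$part" || return 1
    fi
    run mdadm "$array" --add "$part"
}
```

A cautious way to use it is to run `DRY_RUN=1; readd_member /dev/md0 /dev/sdb3` first to review the commands, then `unset DRY_RUN` and run `readd_member /dev/md0 /dev/sdb3` again to perform them.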

Obtained from this link
